Wrapping epoll into a Reactor: Principles and Worked Examples

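Contents

- What is a reactor?
- The three key components of the reactor model and its flow
  - Components
  - Flow
- Wrapping epoll into an event-driven reactor
  - Wrapping each connection's sockfd into an ntyevent
  - Wrapping epfd and the ntyevents into an ntyreactor
  - Turning the read, write, and accept operations into callbacks
  - Setting the attributes of each client's ntyevent
  - Adding an ntyevent to epoll so the kernel monitors it
  - Removing an ntyevent from epoll
  - The read-event callback
  - The write-event callback
  - The accept (new connection) callback
  - Running the reactor
- Simple reactor code and testing
- Advantages of the reactor
- Reactor variants
  - Single reactor + single thread
  - Single reactor + thread pool
  - Multiple reactors + multiple threads
  - Multiple reactors + thread pool
  - Caveats
- Complete reactor code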

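What is a reactor?

This article introduces the reactor step by step, from the basics to the details: it wraps epoll layer by layer until it becomes a reactor model, and closes with the four reactor variants.

The reactor is a high-concurrency server model. It is a framework, a concept, rather than a fixed piece of code, so it comes in many variants, some of which are covered later.

Composition: non-blocking IO (with blocking IO, a full send buffer would simply block the call) plus IO multiplexing. Characteristics: it is built on an event loop and implements business logic through event-driven dispatch, i.e. event callbacks.

The IO in a reactor relies on multiplexing mechanisms such as select, poll, and epoll. With IO multiplexing the system does not need to create and maintain a large number of threads: a single thread with one selector can handle thousands of connections at once, which greatly reduces system overhead.

"Reactor" means exactly that: something that reacts. It turns epoll's IO into events, such as read events and write events. When a read event arrives, the reactor reacts immediately by running the callback registered for that event in advance.

Recall how an ordinary function call works: the program calls a function, the function runs while the program waits, then the function returns its result and control to the program, which carries on. A reactor is an event-driven mechanism, and it differs from an ordinary call in that the application does not actively call some API to get the work done. On the contrary, the reactor inverts the event-handling flow: the application supplies the corresponding interfaces and registers them with the reactor, and when the corresponding event occurs, the reactor itself calls those registered interfaces. These interfaces are also called "callback functions".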
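To make this inversion of control concrete, here is a minimal C sketch (not the article's code): the application registers handlers for hypothetical event types, and a dispatcher standing in for the reactor decides when to call them. The names `EV_READ`, `EV_WRITE`, `register_handler`, `dispatch`, and `count_reads` are illustrative assumptions.

```c
#include <assert.h>
#include <stddef.h>

// Hypothetical event types, for illustration only.
enum { EV_READ = 0, EV_WRITE = 1, EV_TYPES = 2 };

typedef void (*handler_fn)(int fd, void *ctx);

// The "reactor" side: a table of registered callbacks (zero-initialized, so all NULL).
static handler_fn handlers[EV_TYPES];
static void *contexts[EV_TYPES];

// The application registers an interface instead of calling an API itself.
void register_handler(int type, handler_fn fn, void *ctx) {
    assert(type >= 0 && type < EV_TYPES);
    handlers[type] = fn;
    contexts[type] = ctx;
}

// When an event "occurs", the dispatcher calls back into the application.
// Returns 1 if a handler ran, 0 if none was registered for this type.
int dispatch(int type, int fd) {
    assert(type >= 0 && type < EV_TYPES);
    if (handlers[type] == NULL) return 0;
    handlers[type](fd, contexts[type]);
    return 1;
}

// Example application callback: counts how often it was invoked.
void count_reads(int fd, void *ctx) {
    (void) fd;
    ++*(int *) ctx;
}
```

After `register_handler(EV_READ, count_reads, &n)`, every `dispatch(EV_READ, fd)` increments `n`; the application never calls `count_reads` directly.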

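Put simply, a reactor wraps epoll to decouple network IO from business logic: what epoll manages is no longer raw IO but events, and the whole program is driven by those events. As the figure below shows, when ready events are returned, a reactor runs the matching callback for each event automatically, whereas with plain epoll you have to examine each event and decide what to do yourself.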

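The three key components of the reactor model and its flow

The reactor is a common pattern for handling concurrent I/O with synchronous I/O calls. Its central idea is to register every I/O event that needs handling with one central I/O multiplexer (epoll), while the main thread/process blocks on that multiplexer.

As soon as an I/O event arrives or becomes ready (a file descriptor or socket becomes readable or writable), the multiplexer returns and dispatches the previously registered event to its corresponding handler.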

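Components

The reactor model has three important components:

- Multiplexer: provided by the operating system; on Linux this is usually the select, poll, or epoll system call.
- Event dispatcher: routes the ready events returned by the multiplexer to their corresponding handler functions.
- Event handler: the function responsible for handling a particular event.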

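Flow

The concrete flow:

- Register the corresponding event handlers (at the start, only listenfd is registered, for the read-ready event that signals incoming connections)
- The multiplexer waits for events
- When an event arrives, it activates the event dispatcher, which routes the event to its handler
- The event handler processes the event and then registers new events (for a read event: after reading, process the data according to the business logic and register a write event, whose handler sends the response; for a read event on listenfd: register a read event for the newly accepted connection)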
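The four steps above can be sketched as a single loop iteration. This is a minimal illustration under assumptions, not the article's code: a `socketpair` stands in for a client connection so it runs without a network, and `struct handler`, `on_readable`, `register_read`, and `run_once` are hypothetical names. The handler pointer travels through `epoll_event.data.ptr`, the same trick the reactor below relies on.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <sys/epoll.h>
#include <sys/socket.h>
#include <unistd.h>

// Hypothetical per-fd record: the fd plus the callback to run when it is ready.
struct handler {
    int fd;
    int (*on_ready)(struct handler *h);
    char buf[64];
    ssize_t len;
};

// Step 4 example: read whatever arrived and remember how much.
int on_readable(struct handler *h) {
    h->len = read(h->fd, h->buf, sizeof(h->buf));
    return h->len > 0 ? 0 : -1;
}

// Step 1: register the handler for read readiness.
int register_read(int epfd, struct handler *h) {
    struct epoll_event ev = {0};
    ev.events = EPOLLIN;
    ev.data.ptr = h;  // epoll_wait hands this pointer back when fd is ready
    return epoll_ctl(epfd, EPOLL_CTL_ADD, h->fd, &ev);
}

// Steps 2-3: wait for events, then dispatch each one to its handler.
// Returns the number of handlers invoked, or -1 if epoll_wait failed.
int run_once(int epfd, int timeout_ms) {
    struct epoll_event ready[16];
    int n = epoll_wait(epfd, ready, 16, timeout_ms);
    for (int i = 0; i < n; i++) {
        struct handler *h = ready[i].data.ptr;
        assert(h != NULL);
        h->on_ready(h);
    }
    return n;
}
```

A real reactor simply wraps `run_once` in `while (1)`, and a handler may re-register its fd for a different event before returning.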

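Wrapping epoll into an event-driven reactor

Wrapping each connection's sockfd into an ntyevent

Each connection corresponds to one file descriptor fd, and that connection (fd) has its own events (read, write). Because every fd is set to non-blocking mode, a single recv or send may move only part of the data, so each connection needs its own two buffers to keep that state between events; their size depends on the business requirements.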

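```c
#define BUFFER_LENGTH 4096  // buffer size; choose according to business needs

typedef int (*NtyCallBack)(int, int, void *);  // (fd, events, arg)

struct ntyevent {
    int fd;                       // socket fd
    int events;                   // epoll events currently registered
    char sbuffer[BUFFER_LENGTH];  // send (write) buffer
    int slength;                  // bytes pending in sbuffer
    char rbuffer[BUFFER_LENGTH];  // receive (read) buffer
    int rlength;                  // bytes stored in rbuffer
    NtyCallBack callback;         // callback for the current event
    void *arg;                    // callback argument (the ntyreactor)
    int status;                   // 1: already in epoll (next update uses MOD), 0: not added
};
```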

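Wrapping epfd and the ntyevents into an ntyreactor

The socket fds are now wrapped as ntyevents, but how many ntyevents are there? In this demo, initializing the reactor points *events at an array of 1024 ntyevents indexed by fd. Because the array is indexed by fd, any fd of 1024 or above would fall outside it. (In principle clients can keep connecting well beyond 1024; a solution comes later, and this version keeps things simple.) epfd obviously has to be wrapped in as well.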

```c
struct ntyreactor {
    int epfd;                 // epoll instance
    struct ntyevent *events;  // MAX_EPOLL_EVENTS ntyevents, indexed by fd
    // alternative: struct ntyevent events[1024];
};
```
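Because this version indexes the array directly by fd, any fd at or above `MAX_EPOLL_EVENTS` must be rejected before it is used as an index. A minimal guard, assuming a hypothetical helper `reactor_event_for_fd` and a cut-down `struct slot` in place of `ntyevent`:

```c
#include <assert.h>
#include <stddef.h>

#define MAX_EPOLL_EVENTS 1024

struct slot { int fd; int status; };  // cut-down stand-in for struct ntyevent

struct fixed_reactor {
    struct slot events[MAX_EPOLL_EVENTS];  // one slot per possible fd
};

// Returns the slot for fd, or NULL when fd falls outside the fixed array.
struct slot *reactor_event_for_fd(struct fixed_reactor *r, int fd) {
    assert(r != NULL);
    if (fd < 0 || fd >= MAX_EPOLL_EVENTS) return NULL;
    return &r->events[fd];
}
```

A caller such as the accept callback would close a connection whose fd gets `NULL` back instead of writing past the array.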

Turning the read, write, and accept operations into callbacks

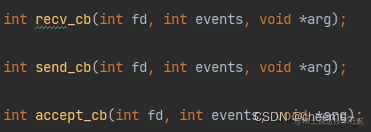

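As already mentioned, each event is written as a callback. The fd parameter tells the callback which connection it belongs to, events says which event fired, and arg carries the ntyreactor (with later multi-threaded or multi-process versions in mind, making ntyreactor a global variable seemed like a bad idea).

```c
typedef int (*NtyCallBack)(int, int, void *);  // (fd, events, arg)

int recv_cb(int fd, int events, void *arg);    // read event
int send_cb(int fd, int events, void *arg);    // write event
int accept_cb(int fd, int events, void *arg);  // new connection on listenfd
```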

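Setting the attributes of each client's ntyevent

Two examples: the first socket is always listenfd, which is used for listening, so the first step is to set the attributes of its ntyevent. Likewise, once a read event has been handled and the connection should switch to a write event, the read callback must be replaced by the write callback.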

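```c
// (Re)initialize an ntyevent: bind it to fd and install the callback for the next event.
void nty_event_set(struct ntyevent *ev, int fd, NtyCallBack callback, void *arg) {
    ev->fd = fd;
    ev->callback = callback;
    ev->events = 0;  // filled in by nty_event_add
    ev->arg = arg;
}
```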

Adding an ntyevent to epoll so the kernel monitors it

```c
// Register ntyev with epoll for `events`: EPOLL_CTL_ADD the first time, EPOLL_CTL_MOD afterwards.
int nty_event_add(int epfd, int events, struct ntyevent *ntyev) {
    struct epoll_event ev = {0, {0}};
    ev.data.ptr = ntyev;  // epoll_wait hands this pointer back on readiness
    ev.events = ntyev->events = events;

    int op = (ntyev->status == 1) ? EPOLL_CTL_MOD : EPOLL_CTL_ADD;
    if (epoll_ctl(epfd, op, ntyev->fd, &ev) < 0) {
        printf("event add failed [fd=%d], events[%d], err:%s, errno:%d\n",
               ntyev->fd, events, strerror(errno), errno);
        return -1;
    }
    ntyev->status = 1;  // mark as registered only after the kernel accepted it
    return 0;
}
```
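The `status` flag exists because `epoll_ctl` refuses to `EPOLL_CTL_ADD` an fd that is already registered (it fails with `EEXIST`) and refuses to `EPOLL_CTL_MOD` one that is not (`ENOENT`). This test sketch, using a pipe as a stand-in for a socket and hypothetical helpers `add_or_mod` and `raw_ctl_errno`, shows both errors and how tracking the state avoids them:

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <sys/epoll.h>
#include <unistd.h>

// Mirrors the ADD-vs-MOD choice in nty_event_add: *status is 1 once fd is registered.
int add_or_mod(int epfd, int fd, unsigned events, int *status) {
    assert(status != NULL);
    struct epoll_event ev = {0};
    ev.events = events;
    ev.data.fd = fd;
    int op = (*status == 1) ? EPOLL_CTL_MOD : EPOLL_CTL_ADD;
    if (epoll_ctl(epfd, op, fd, &ev) < 0) return -1;
    *status = 1;  // only mark as registered after success
    return 0;
}

// Raw epoll_ctl with a fixed op: returns 0 on success, otherwise the errno value.
int raw_ctl_errno(int epfd, int op, int fd, unsigned events) {
    struct epoll_event ev = {0};
    ev.events = events;
    ev.data.fd = fd;
    return epoll_ctl(epfd, op, fd, &ev) < 0 ? errno : 0;
}
```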

Removing an ntyevent from epoll

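```c
// Remove ev from epoll; returns -1 if it was not registered. Call this before close(ev->fd).
int nty_event_del(int epfd, struct ntyevent *ev) {
    struct epoll_event ep_ev = {0, {0}};
    if (ev->status != 1) {
        return -1;
    }
    ep_ev.data.ptr = ev;
    ev->status = 0;
    // Kernels before 2.6.9 require a non-NULL event here; newer ones also accept:
    // epoll_ctl(epfd, EPOLL_CTL_DEL, ev->fd, NULL);
    epoll_ctl(epfd, EPOLL_CTL_DEL, ev->fd, &ep_ev);
    return 0;
}
```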
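Order matters when tearing down a connection: `EPOLL_CTL_DEL` needs a still-open fd, so remove the fd from epoll first and close it afterwards. (Closing alone does drop the registration once every descriptor referring to the underlying file is closed, but the `status` flag would then be left stale.) A small check, assuming pipes as stand-ins for sockets and hypothetical helpers `del_then_close` and `close_then_del`:

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <sys/epoll.h>
#include <unistd.h>

// Correct order: remove fd from epoll, then close it. Returns 0 or the errno value.
// A NULL event pointer is accepted for EPOLL_CTL_DEL since Linux 2.6.9.
int del_then_close(int epfd, int fd) {
    assert(fd >= 0);
    if (epoll_ctl(epfd, EPOLL_CTL_DEL, fd, NULL) < 0) return errno;
    return close(fd) < 0 ? errno : 0;
}

// Wrong order, for comparison: after close(), the fd number is no longer valid.
int close_then_del(int epfd, int fd) {
    close(fd);
    return epoll_ctl(epfd, EPOLL_CTL_DEL, fd, NULL) < 0 ? errno : 0;
}
```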

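The read-event callback

This is the callback that gets triggered. Real code has to be tailored to the business logic, so this one is only illustrative: it reads once and then switches the connection to a write event. It uses the single buffer/length pair of the struct in the simple version below rather than the separate read and write buffers shown above.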

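```c
int recv_cb(int fd, int events, void *arg) {
    struct ntyreactor *reactor = (struct ntyreactor *) arg;
    struct ntyevent *ntyev = &reactor->events[fd];

    int len = recv(fd, ntyev->buffer, BUFFER_LENGTH - 1, 0);  // leave room for '\0'
    int err = errno;  // save before other calls can overwrite it
    nty_event_del(reactor->epfd, ntyev);

    if (len > 0) {
        ntyev->length = len;
        ntyev->buffer[len] = '\0';
        printf("C[%d]:%s\n", fd, ntyev->buffer);
        // Switch to a write event: the next callback will be send_cb.
        nty_event_set(ntyev, fd, send_cb, reactor);
        nty_event_add(reactor->epfd, EPOLLOUT, ntyev);
    } else if (len < 0 && (err == EAGAIN || err == EWOULDBLOCK)) {
        nty_event_add(reactor->epfd, EPOLLIN, ntyev);  // nothing to read yet: keep waiting
    } else if (len == 0) {
        // The peer closed the connection.
        printf("[fd=%d] pos[%td], closed\n", fd, ntyev - reactor->events);
        close(ntyev->fd);
    } else {
        printf("recv[fd=%d] error[%d]:%s\n", fd, err, strerror(err));
        close(ntyev->fd);
    }
    return len;
}
```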
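Stripped of the reactor bookkeeping, the read callback distinguishes the outcomes of `recv` on a non-blocking socket: data, orderly shutdown (0), and error, where `EAGAIN`/`EWOULDBLOCK` only means "nothing to read yet". A minimal sketch with a hypothetical `read_into` helper; it passes `MSG_DONTWAIT` so it stays non-blocking even on a blocking fd, and it is tested over a `socketpair`:

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

enum read_result { READ_DATA, READ_CLOSED, READ_AGAIN, READ_ERROR };

// Read into buf (capacity cap), always leaving room for a terminating '\0'.
enum read_result read_into(int fd, char *buf, size_t cap, ssize_t *out_len) {
    assert(cap > 0 && out_len != NULL);
    ssize_t n = recv(fd, buf, cap - 1, MSG_DONTWAIT);
    *out_len = n;
    if (n > 0) {
        buf[n] = '\0';     // safe: n <= cap - 1
        return READ_DATA;  // reactor: switch the connection to a write event
    }
    if (n == 0) return READ_CLOSED;                                  // close our side
    if (errno == EAGAIN || errno == EWOULDBLOCK) return READ_AGAIN;  // keep waiting
    return READ_ERROR;
}
```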

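The write-event callback

This is the triggered callback; again, real code depends on the business logic, so this one is only illustrative: it writes back the data read by the read event and then switches back to a read event, which amounts to an echo server.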

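```c
int send_cb(int fd, int events, void *arg) {
    struct ntyreactor *reactor = (struct ntyreactor *) arg;
    struct ntyevent *ntyev = &reactor->events[fd];

    // Demo simplification: assumes the whole buffer goes out in one call (no partial-send retry).
    // MSG_NOSIGNAL avoids a process-killing SIGPIPE if the peer has already gone away.
    int len = send(fd, ntyev->buffer, ntyev->length, MSG_NOSIGNAL);
    if (len > 0) {
        printf("send[fd=%d], [%d]%s\n", fd, len, ntyev->buffer);
        // Switch back to a read event: the next callback will be recv_cb.
        nty_event_del(reactor->epfd, ntyev);
        nty_event_set(ntyev, fd, recv_cb, reactor);
        nty_event_add(reactor->epfd, EPOLLIN, ntyev);
    } else {
        printf("send[fd=%d] error %s\n", fd, strerror(errno));
        nty_event_del(reactor->epfd, ntyev);  // remove from epoll before closing
        close(ntyev->fd);
    }
    return len;
}
```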
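The echo demo assumes `send` pushes the whole buffer at once. On a non-blocking socket it may accept only part of it, so a more careful write callback keeps an offset and stays registered for `EPOLLOUT` until everything has gone out. A minimal sketch, where `struct outbuf` and `flush_some` are hypothetical names:

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

// Hypothetical outgoing buffer with a send offset.
struct outbuf {
    const char *data;
    size_t length;  // total bytes to send
    size_t offset;  // bytes already sent
};

// Send as much as the socket accepts right now.
// Returns 1 when everything is sent (switch back to EPOLLIN),
// 0 when bytes remain (stay on EPOLLOUT), -1 on a real error.
int flush_some(int fd, struct outbuf *ob) {
    assert(ob != NULL && ob->offset <= ob->length);
    while (ob->offset < ob->length) {
        ssize_t n = send(fd, ob->data + ob->offset, ob->length - ob->offset,
                         MSG_DONTWAIT | MSG_NOSIGNAL);  // no SIGPIPE if the peer is gone
        if (n > 0) {
            ob->offset += (size_t) n;
        } else if (n < 0 && (errno == EAGAIN || errno == EWOULDBLOCK)) {
            return 0;  // kernel send buffer full: wait for the next EPOLLOUT
        } else if (n < 0 && errno == EINTR) {
            continue;  // interrupted by a signal: retry
        } else {
            return -1;
        }
    }
    return 1;
}
```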

The accept (new connection) callback

At its core this is accept, followed by adding the new connection to epoll.

```c
int accept_cb(int fd, int events, void *arg) {
    struct ntyreactor *reactor = (struct ntyreactor *) arg;
    if (reactor == NULL) return -1;

    struct sockaddr_in client_addr;
    socklen_t len = sizeof(client_addr);
    int clientfd = accept(fd, (struct sockaddr *) &client_addr, &len);
    if (clientfd == -1) {
        printf("accept: %s\n", strerror(errno));
        return -1;
    }
    printf("client fd = %d\n", clientfd);

    // The events array has MAX_EPOLL_EVENTS slots indexed by fd: reject fds beyond it.
    if (clientfd >= MAX_EPOLL_EVENTS) {
        printf("%s: clientfd %d exceeds capacity %d\n", __func__, clientfd, MAX_EPOLL_EVENTS);
        close(clientfd);
        return -1;
    }

    // Add O_NONBLOCK while keeping the fd's existing status flags.
    int flags = fcntl(clientfd, F_GETFL, 0);
    if (flags < 0 || fcntl(clientfd, F_SETFL, flags | O_NONBLOCK) < 0) {
        printf("%s: fcntl nonblocking failed: %s\n", __func__, strerror(errno));
        close(clientfd);
        return -1;
    }

    nty_event_set(&reactor->events[clientfd], clientfd, recv_cb, reactor);
    nty_event_add(reactor->epfd, EPOLLIN, &reactor->events[clientfd]);

    printf("new connect [%s:%d][time:%ld], pos[%d]\n", inet_ntoa(client_addr.sin_addr),
           ntohs(client_addr.sin_port), reactor->events[clientfd].last_active, clientfd);
    return 0;
}
```
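`F_SETFL` replaces the file status flags, so the usual idiom is to read them with `F_GETFL` and OR in `O_NONBLOCK`, which keeps any flags already set. Factored out as a hypothetical helper `set_nonblocking`, together with a hypothetical check `read_would_block` showing that a read on an empty non-blocking fd returns `EAGAIN` instead of blocking:

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

// Add O_NONBLOCK to fd while preserving its other status flags.
// Returns 0 on success, -1 on failure (errno set by fcntl).
int set_nonblocking(int fd) {
    assert(fd >= 0);
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags < 0) return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK) < 0 ? -1 : 0;
}

// Returns 1 if a one-byte read on fd would have blocked (EAGAIN/EWOULDBLOCK), else 0.
int read_would_block(int fd) {
    char c;
    return read(fd, &c, 1) < 0 && (errno == EAGAIN || errno == EWOULDBLOCK);
}
```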

Running the reactor

This simply moves the epoll_wait loop out of main and into the ntyreactor_run function.

```c
int ntyreactor_run(struct ntyreactor *reactor) {
    if (reactor == NULL) return -1;
    if (reactor->epfd < 0) return -1;
    if (reactor->events == NULL) return -1;

    struct epoll_event events[MAX_EPOLL_EVENTS];
    while (1) {
        // Wait up to 1000 ms for ready events.
        int nready = epoll_wait(reactor->epfd, events, MAX_EPOLL_EVENTS, 1000);
        if (nready < 0) {
            if (errno == EINTR) continue;  // interrupted by a signal: wait again
            printf("epoll_wait error: %s, exit\n", strerror(errno));
            return -1;
        }
        // Dispatch: data.ptr is the ntyevent registered in nty_event_add.
        for (int i = 0; i < nready; i++) {
            struct ntyevent *ev = (struct ntyevent *) events[i].data.ptr;
            ev->callback(ev->fd, events[i].events, ev->arg);
        }
    }
}
```

Simple reactor code and testing

A follow-up article will test this with a million connections.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/epoll.h>
#include <arpa/inet.h>
#include <fcntl.h>
#include <unistd.h>
#include <errno.h>
#include <time.h>

#define BUFFER_LENGTH 4096
#define MAX_EPOLL_EVENTS 1024
#define SERVER_PORT 8082

typedef int (*NtyCallBack)(int, int, void *);

struct ntyevent {
    int fd;
    int events;
    void *arg;
    NtyCallBack callback;
    int status;                  // 1: in epoll (use MOD), 0: not added
    char buffer[BUFFER_LENGTH];
    int length;
    long last_active;            // time of the last nty_event_set, used by the heartbeat
};

struct ntyreactor {
    int epfd;
    struct ntyevent *events;     // MAX_EPOLL_EVENTS slots, indexed by fd
};

int recv_cb(int fd, int events, void *arg);
int send_cb(int fd, int events, void *arg);
int accept_cb(int fd, int events, void *arg);

void nty_event_set(struct ntyevent *ev, int fd, NtyCallBack callback, void *arg) {
    ev->fd = fd;
    ev->callback = callback;
    ev->events = 0;
    ev->arg = arg;
    ev->last_active = time(NULL);
}

int nty_event_add(int epfd, int events, struct ntyevent *ntyev) {
    struct epoll_event ev = {0, {0}};
    ev.data.ptr = ntyev;
    ev.events = ntyev->events = events;
    int op = (ntyev->status == 1) ? EPOLL_CTL_MOD : EPOLL_CTL_ADD;
    if (epoll_ctl(epfd, op, ntyev->fd, &ev) < 0) {
        printf("event add failed [fd=%d], events[%d], err:%s, errno:%d\n",
               ntyev->fd, events, strerror(errno), errno);
        return -1;
    }
    ntyev->status = 1;  // registered only after success
    return 0;
}

int nty_event_del(int epfd, struct ntyevent *ev) {
    struct epoll_event ep_ev = {0, {0}};
    if (ev->status != 1) return -1;
    ep_ev.data.ptr = ev;
    ev->status = 0;
    epoll_ctl(epfd, EPOLL_CTL_DEL, ev->fd, &ep_ev);  // must run before close(ev->fd)
    return 0;
}

int recv_cb(int fd, int events, void *arg) {
    struct ntyreactor *reactor = (struct ntyreactor *) arg;
    struct ntyevent *ntyev = &reactor->events[fd];

    int len = recv(fd, ntyev->buffer, BUFFER_LENGTH - 1, 0);  // leave room for '\0'
    int err = errno;  // save before other calls can overwrite it
    nty_event_del(reactor->epfd, ntyev);

    if (len > 0) {
        ntyev->length = len;
        ntyev->buffer[len] = '\0';
        printf("C[%d]:%s\n", fd, ntyev->buffer);
        nty_event_set(ntyev, fd, send_cb, reactor);   // next: write event
        nty_event_add(reactor->epfd, EPOLLOUT, ntyev);
    } else if (len < 0 && (err == EAGAIN || err == EWOULDBLOCK)) {
        nty_event_add(reactor->epfd, EPOLLIN, ntyev);  // nothing to read yet
    } else if (len == 0) {
        printf("[fd=%d] pos[%td], closed\n", fd, ntyev - reactor->events);
        close(ntyev->fd);
    } else {
        printf("recv[fd=%d] error[%d]:%s\n", fd, err, strerror(err));
        close(ntyev->fd);
    }
    return len;
}

int send_cb(int fd, int events, void *arg) {
    struct ntyreactor *reactor = (struct ntyreactor *) arg;
    struct ntyevent *ntyev = &reactor->events[fd];

    // Demo: assumes one send call writes everything; MSG_NOSIGNAL avoids SIGPIPE.
    int len = send(fd, ntyev->buffer, ntyev->length, MSG_NOSIGNAL);
    if (len > 0) {
        printf("send[fd=%d], [%d]%s\n", fd, len, ntyev->buffer);
        nty_event_del(reactor->epfd, ntyev);
        nty_event_set(ntyev, fd, recv_cb, reactor);   // next: read event
        nty_event_add(reactor->epfd, EPOLLIN, ntyev);
    } else {
        printf("send[fd=%d] error %s\n", fd, strerror(errno));
        nty_event_del(reactor->epfd, ntyev);  // remove before closing
        close(ntyev->fd);
    }
    return len;
}

int accept_cb(int fd, int events, void *arg) {
    struct ntyreactor *reactor = (struct ntyreactor *) arg;
    if (reactor == NULL) return -1;

    struct sockaddr_in client_addr;
    socklen_t len = sizeof(client_addr);
    int clientfd = accept(fd, (struct sockaddr *) &client_addr, &len);
    if (clientfd == -1) {
        printf("accept: %s\n", strerror(errno));
        return -1;
    }
    printf("client fd = %d\n", clientfd);

    if (clientfd >= MAX_EPOLL_EVENTS) {  // no slot for this fd in the fixed array
        printf("%s: clientfd %d exceeds capacity %d\n", __func__, clientfd, MAX_EPOLL_EVENTS);
        close(clientfd);
        return -1;
    }
    int flags = fcntl(clientfd, F_GETFL, 0);
    if (flags < 0 || fcntl(clientfd, F_SETFL, flags | O_NONBLOCK) < 0) {
        printf("%s: fcntl nonblocking failed: %s\n", __func__, strerror(errno));
        close(clientfd);
        return -1;
    }

    nty_event_set(&reactor->events[clientfd], clientfd, recv_cb, reactor);
    nty_event_add(reactor->epfd, EPOLLIN, &reactor->events[clientfd]);

    printf("new connect [%s:%d][time:%ld], pos[%d]\n", inet_ntoa(client_addr.sin_addr),
           ntohs(client_addr.sin_port), reactor->events[clientfd].last_active, clientfd);
    return 0;
}

int init_sock(unsigned short port) {
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0) {
        printf("socket failed : %s\n", strerror(errno));
        return -1;
    }
    struct sockaddr_in server_addr;
    memset(&server_addr, 0, sizeof(server_addr));
    server_addr.sin_family = AF_INET;
    server_addr.sin_addr.s_addr = htonl(INADDR_ANY);
    server_addr.sin_port = htons(port);
    if (bind(fd, (struct sockaddr *) &server_addr, sizeof(server_addr)) < 0 ||
        listen(fd, 20) < 0) {
        printf("bind/listen failed : %s\n", strerror(errno));
        close(fd);
        return -1;
    }
    return fd;
}

int ntyreactor_init(struct ntyreactor *reactor) {
    if (reactor == NULL) return -1;
    memset(reactor, 0, sizeof(struct ntyreactor));
    reactor->epfd = epoll_create(1);  // size is ignored but must be > 0
    if (reactor->epfd < 0) {
        printf("create epfd in %s err %s\n", __func__, strerror(errno));
        return -2;
    }
    // calloc zeroes every slot, so each status starts at 0 (not in epoll).
    reactor->events = (struct ntyevent *) calloc(MAX_EPOLL_EVENTS, sizeof(struct ntyevent));
    if (reactor->events == NULL) {
        printf("create epoll events in %s err %s\n", __func__, strerror(errno));
        close(reactor->epfd);
        return -3;
    }
    return 0;
}

int ntyreactor_destory(struct ntyreactor *reactor) {
    close(reactor->epfd);
    free(reactor->events);
    return 0;
}

int ntyreactor_addlistener(struct ntyreactor *reactor, int sockfd, NtyCallBack acceptor) {
    if (reactor == NULL) return -1;
    if (reactor->events == NULL) return -1;
    if (sockfd < 0 || sockfd >= MAX_EPOLL_EVENTS) return -1;
    nty_event_set(&reactor->events[sockfd], sockfd, acceptor, reactor);
    return nty_event_add(reactor->epfd, EPOLLIN, &reactor->events[sockfd]);
}

int ntyreactor_run(struct ntyreactor *reactor) {
    if (reactor == NULL) return -1;
    if (reactor->epfd < 0) return -1;
    if (reactor->events == NULL) return -1;

    struct epoll_event events[MAX_EPOLL_EVENTS];
    int checkpos = 0, i;
    while (1) {
        // Heartbeat: each pass checks 100 slots round-robin and closes
        // connections that have been idle for 60 s or more.
        long now = time(NULL);
        for (i = 0; i < 100; i++, checkpos++) {
            if (checkpos == MAX_EPOLL_EVENTS) checkpos = 0;
            struct ntyevent *ev = &reactor->events[checkpos];
            // Skip free slots and listening sockets (instead of hard-coding fd 3).
            if (ev->status != 1 || ev->callback == accept_cb) continue;
            if (now - ev->last_active >= 60) {
                printf("[fd=%d] timeout\n", ev->fd);
                nty_event_del(reactor->epfd, ev);  // remove before closing
                close(ev->fd);
            }
        }

        int nready = epoll_wait(reactor->epfd, events, MAX_EPOLL_EVENTS, 1000);
        if (nready < 0) {
            if (errno == EINTR) continue;
            printf("epoll_wait error: %s, exit\n", strerror(errno));
            return -1;
        }
        for (i = 0; i < nready; i++) {
            struct ntyevent *ev = (struct ntyevent *) events[i].data.ptr;
            ev->callback(ev->fd, events[i].events, ev->arg);
        }
    }
}

int main(int argc, char *argv[]) {
    int sockfd = init_sock(SERVER_PORT);
    if (sockfd < 0) return -1;

    struct ntyreactor *reactor = (struct ntyreactor *) malloc(sizeof(struct ntyreactor));
    if (reactor == NULL || ntyreactor_init(reactor) != 0) {
        free(reactor);
        close(sockfd);
        return -1;
    }
    ntyreactor_addlistener(reactor, sockfd, accept_cb);
    ntyreactor_run(reactor);  // returns only on a fatal epoll_wait error

    ntyreactor_destory(reactor);
    free(reactor);
    close(sockfd);
    return 0;
}
```
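The heartbeat in `ntyreactor_run` spreads its work across loop iterations: each pass inspects only 100 slots and resumes where the previous pass stopped, so a full sweep of the 1024 slots takes 11 passes, each of which can wait up to 1 s in `epoll_wait`. The scan logic alone, as a sketch with hypothetical names (`struct conn`, `scan_idle`) and no sockets:

```c
#include <assert.h>
#include <stddef.h>

struct conn {
    int active;        // 1 if the slot holds a live connection
    int is_listener;   // listening sockets are never timed out
    long last_active;  // seconds, as returned by time(NULL)
};

// Check up to `budget` slots starting at *checkpos (wrapping at n).
// Slots idle for at least `timeout` seconds are deactivated and counted.
// Returns the number of connections timed out in this pass.
int scan_idle(struct conn *slots, int n, int *checkpos, int budget, long now, long timeout) {
    assert(slots != NULL && checkpos != NULL && n > 0);
    int expired = 0;
    for (int i = 0; i < budget; i++, (*checkpos)++) {
        if (*checkpos >= n) *checkpos = 0;
        struct conn *c = &slots[*checkpos];
        if (!c->active || c->is_listener) continue;
        if (now - c->last_active >= timeout) {
            c->active = 0;  // in the reactor: nty_event_del, then close the fd
            expired++;
        }
    }
    return expired;
}
```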

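Advantages of the reactor

The reactor pattern is one of the essential techniques for writing high-performance network servers. It has the following advantages:

- Fast response: it is not blocked by any single synchronous event, even though the reactor itself is still synchronous
- Relatively simple programming: it largely avoids complex multi-threading and synchronization problems, as well as the cost of switching between threads/processes
- Scalability: CPU resources can be fully used simply by adding more reactor instances
- Reusability: the reactor framework itself is independent of the concrete event-handling logic, so it is highly reusable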

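Developing with the reactor model is more productive than using IO multiplexing directly. A reactor is usually single-threaded, and its design goal is for one thread to use all the resources of one CPU; a side benefit is that event handlers often need not worry about mutually exclusive access to shared resources. The drawback is just as clear: hardware no longer follows Moore's law, CPU clock speeds have stopped rising much because of material limits, and capacity now grows through more cores instead. When a program needs multi-core resources, a single-threaded reactor cannot use them.

If the business logic is simple, for example just accessing services that already support concurrent access, you can simply start several reactors, one per CPU core. The requests handled by these reactors are unrelated to each other, so multiple cores are fully utilized. Nginx, used as a static HTTP server, is an example.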

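Reactor variants

Single reactor + single thread

In the single-reactor, single-thread model, all IO operations (reading, writing, accepting connections) are done on the same thread.

Drawbacks:

- With only one thread, events are handled sequentially; the thread can do only one thing at a time, so event priorities cannot be guaranteed
- It cannot make full use of a multi-core CPU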

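Single reactor + thread pool

Compared with the single-reactor single-thread model, here the computation for a received request is not done on the reactor thread but handed to a thread pool, which makes full use of a multi-core CPU.

With this model, several threads may be computing multiple requests from the same connection at the same time, so the order in which results are produced is nondeterministic. The network framework therefore needs to include an id in its protocol design so that the client can tell which request each response belongs to.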
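One common way to carry that id is a small fixed header in front of each message. The layout below (a 4-byte request id followed by a 4-byte payload length, both in network byte order) is a hypothetical example, not a format the article defines; `encode_header` and `decode_header` are illustrative names:

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <arpa/inet.h>

#define HEADER_SIZE 8

// Write the header for (request_id, payload_len) into out[0..7].
void encode_header(uint8_t out[HEADER_SIZE], uint32_t request_id, uint32_t payload_len) {
    uint32_t id_be = htonl(request_id), len_be = htonl(payload_len);
    memcpy(out, &id_be, 4);
    memcpy(out + 4, &len_be, 4);
}

// Parse a header. A worker copies request_id into its response header,
// so the client can match responses to requests whatever order they arrive in.
void decode_header(const uint8_t in[HEADER_SIZE], uint32_t *request_id, uint32_t *payload_len) {
    assert(request_id != NULL && payload_len != NULL);
    uint32_t id_be, len_be;
    memcpy(&id_be, in, 4);
    memcpy(&len_be, in + 4, 4);
    *request_id = ntohl(id_be);
    *payload_len = ntohl(len_be);
}
```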

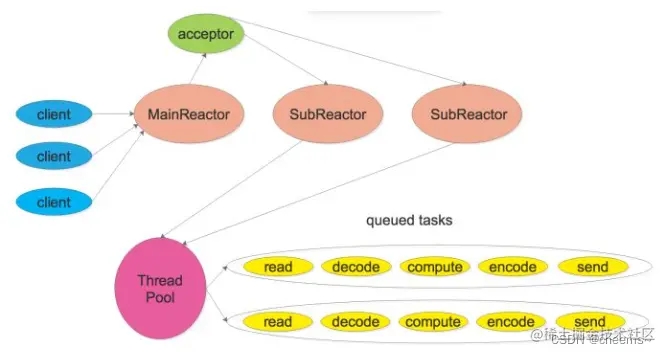

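Multiple reactors + multiple threads

The defining feature of this model is one event loop per thread: a main reactor accepts connections and then attaches each connection to some sub reactor, so all operations on that connection are completed in the thread running that sub reactor.

Connections can be spread across several threads, making full use of the CPU. In practice the number of reactors can be fixed, for example equal to the number of CPUs.

Compared with the single-reactor multi-thread model, this saves the two context switches of entering and leaving the thread pool; small computations can be done and answered on the current IO thread, which lowers response latency.

It also effectively prevents a single reactor from becoming saturated when IO pressure is high.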

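Multiple reactors + thread pool

This model is a hybrid of the two above: it uses multiple reactors to handle IO and a thread pool to handle computation. It suits applications that see both bursts of IO (spread across the multiple reactors) and bursts of computation (the thread pool spreads the computing tasks of one connection across several threads).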

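Caveats

When implementing any of the four reactor models above, the principle to follow for simplicity is: each file descriptor is operated on by only one thread.

This easily solves the ordering problem of sending and receiving messages, and it also avoids the various race conditions around closing file descriptors. One thread may operate on many file descriptors, but a thread must not operate on file descriptors owned by another thread.

This is not hard to achieve, and the same principle applies to epoll itself. The Linux documentation long left unclear what happens when one thread is blocked in epoll_wait while another thread adds a new fd to the same epoll fd (the current epoll_wait(2) man page does state that if the newly added fd becomes ready, the blocked epoll_wait call unblocks).

Will events on the new fd be returned by that epoll_wait call? To be safe, all operations on the same epoll fd (adding, removing, modifying, and so on) should be done in the same thread.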
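One way to keep every `epoll_ctl` call on the owning thread is to let other threads only post work to it, for example by writing the new fd into a pipe that the owner watches with its own epoll instance; the owner then drains the pipe and registers the fds itself. The sketch below shows only that hand-off, single-threaded so it can be tested; `make_handoff_pipe`, `post_fd`, and `drain_and_register` are hypothetical names.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <sys/epoll.h>
#include <unistd.h>

// Create the hand-off pipe; the read end is non-blocking so draining stops at EAGAIN.
int make_handoff_pipe(int p[2]) {
    if (pipe(p) < 0) return -1;
    int flags = fcntl(p[0], F_GETFL, 0);
    return (flags < 0 || fcntl(p[0], F_SETFL, flags | O_NONBLOCK) < 0) ? -1 : 0;
}

// Any thread: ask the owner to take over fd. A pipe write of sizeof(int) bytes
// is atomic (far below PIPE_BUF), so concurrent posts never interleave.
int post_fd(int pipe_wr, int fd) {
    return write(pipe_wr, &fd, sizeof(fd)) == (ssize_t) sizeof(fd) ? 0 : -1;
}

// Owning thread only, when pipe_rd is readable in its epoll: register every
// posted fd with its own epoll instance. Returns the number of fds registered.
int drain_and_register(int pipe_rd, int epfd) {
    assert(epfd >= 0);
    int fd, count = 0;
    while (read(pipe_rd, &fd, sizeof(fd)) == (ssize_t) sizeof(fd)) {
        struct epoll_event ev = {0};
        ev.events = EPOLLIN;
        ev.data.fd = fd;
        if (epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev) == 0) count++;
    }
    return count;
}
```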

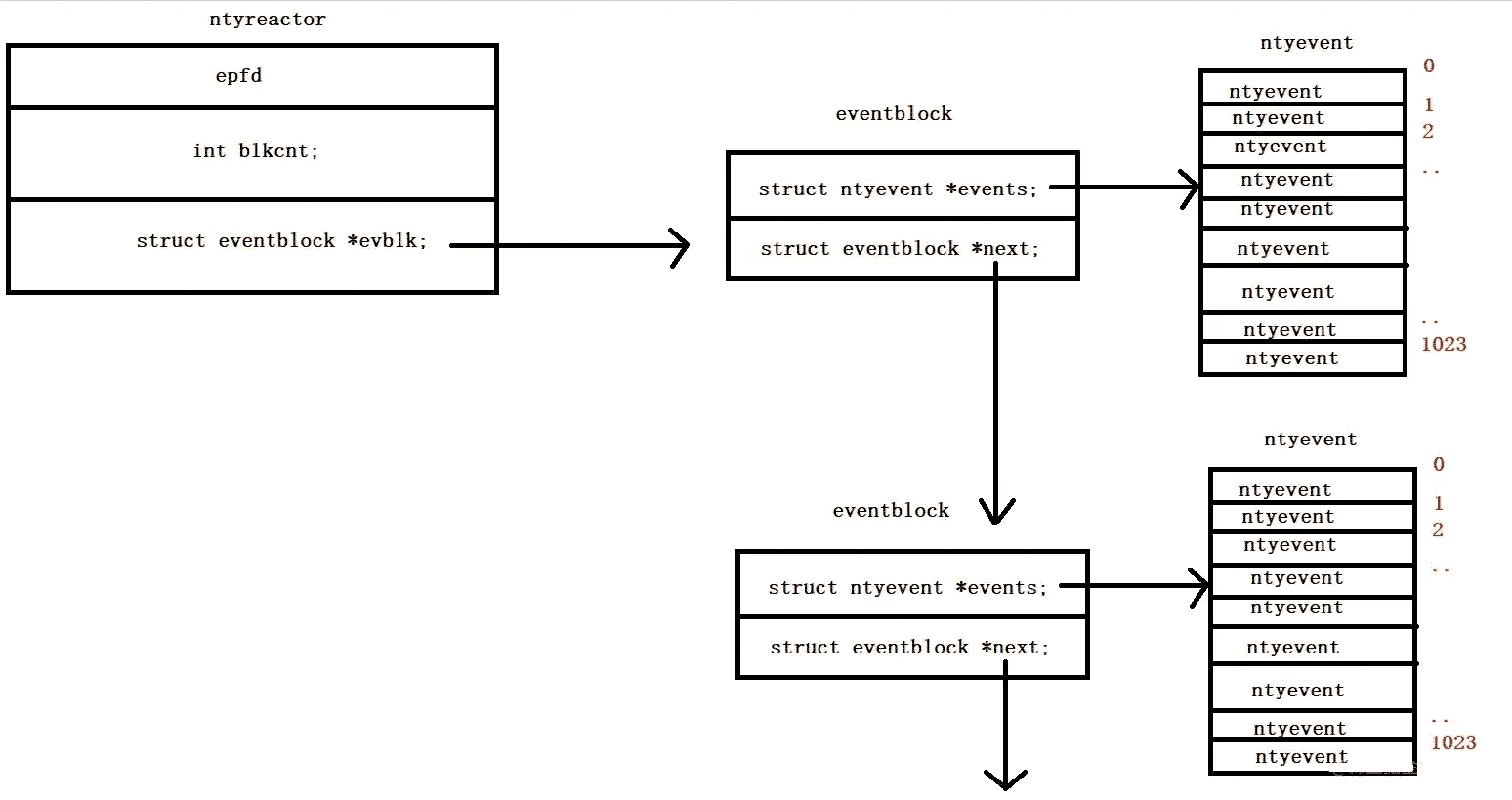

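Complete reactor code

Since the number of fds is not known in advance, here ntyreactor holds a linked list of eventblocks, each containing 1024 ntyevents. An fd is located by fd/1024, which gives the index of its eventblock, and fd%1024, which gives its position within that eventblock; blocks are allocated on demand.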
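Worked through with `MAX_EPOLL_EVENTS = 1024`: fd 2050 lives in block 2050 / 1024 = 2 at slot 2050 % 1024 = 2, so the first lookup for it has to grow the list from one block to three. A stripped-down sketch of that lazy growth, with hypothetical `struct blocks`, `slot_for_fd`, and `free_blocks`, and plain `int` slots in place of `ntyevent`:

```c
#include <assert.h>
#include <stdlib.h>

#define SLOTS_PER_BLOCK 1024  // plays the role of MAX_EPOLL_EVENTS

struct block {
    struct block *next;
    int slots[SLOTS_PER_BLOCK];
};

struct blocks {
    struct block *head;
    int count;  // number of allocated blocks
};

// Return the slot for fd, appending zeroed blocks until block fd / SLOTS_PER_BLOCK exists.
int *slot_for_fd(struct blocks *b, int fd) {
    assert(b != NULL && fd >= 0);
    int idx = fd / SLOTS_PER_BLOCK;
    struct block **link = &b->head;
    for (int i = 0; i <= idx; i++) {
        if (*link == NULL) {
            *link = calloc(1, sizeof(struct block));
            if (*link == NULL) return NULL;  // out of memory: do not loop forever
            b->count++;
        }
        if (i < idx) link = &(*link)->next;
    }
    return &(*link)->slots[fd % SLOTS_PER_BLOCK];
}

void free_blocks(struct blocks *b) {
    struct block *cur = b->head;
    while (cur != NULL) {
        struct block *next = cur->next;
        free(cur);
        cur = next;
    }
    b->head = NULL;
    b->count = 0;
}
```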

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/epoll.h>
#include <arpa/inet.h>
#include <fcntl.h>
#include <unistd.h>
#include <errno.h>

#define BUFFER_LENGTH 4096
#define MAX_EPOLL_EVENTS 1024   // also the number of ntyevents per eventblock
#define SERVER_PORT 8081
#define PORT_COUNT 100          // listen on ports port .. port + PORT_COUNT - 1

typedef int (*NCALLBACK)(int, int, void *);

struct ntyevent {
    int fd;
    int events;
    void *arg;
    NCALLBACK callback;
    int status;                 // 1: in epoll (use MOD), 0: not added
    char buffer[BUFFER_LENGTH];
    int length;
};

// One block holds MAX_EPOLL_EVENTS ntyevents; blocks are chained as fds grow.
struct eventblock {
    struct eventblock *next;
    struct ntyevent *events;
};

struct ntyreactor {
    int epfd;
    int blkcnt;                 // number of blocks in the list
    struct eventblock *evblk;   // first block
};

int recv_cb(int fd, int events, void *arg);
int send_cb(int fd, int events, void *arg);
struct ntyevent *ntyreactor_find_event_idx(struct ntyreactor *reactor, int sockfd);

// NCALLBACK is already a function-pointer type, so it is taken by value (not NCALLBACK *).
void nty_event_set(struct ntyevent *ev, int fd, NCALLBACK callback, void *arg) {
    ev->fd = fd;
    ev->callback = callback;
    ev->events = 0;
    ev->arg = arg;
}

int nty_event_add(int epfd, int events, struct ntyevent *ev) {
    struct epoll_event ep_ev = {0, {0}};
    ep_ev.data.ptr = ev;
    ep_ev.events = ev->events = events;
    int op = (ev->status == 1) ? EPOLL_CTL_MOD : EPOLL_CTL_ADD;
    if (epoll_ctl(epfd, op, ev->fd, &ep_ev) < 0) {
        printf("event add failed [fd=%d], events[%d]: %s\n", ev->fd, events, strerror(errno));
        return -1;
    }
    ev->status = 1;
    return 0;
}

int nty_event_del(int epfd, struct ntyevent *ev) {
    struct epoll_event ep_ev = {0, {0}};
    if (ev->status != 1) return -1;
    ep_ev.data.ptr = ev;
    ev->status = 0;
    epoll_ctl(epfd, EPOLL_CTL_DEL, ev->fd, &ep_ev);  // must run before close(ev->fd)
    return 0;
}

int recv_cb(int fd, int events, void *arg) {
    struct ntyreactor *reactor = (struct ntyreactor *) arg;
    struct ntyevent *ev = ntyreactor_find_event_idx(reactor, fd);
    if (ev == NULL) return -1;

    int len = recv(fd, ev->buffer, BUFFER_LENGTH - 1, 0);  // leave room for '\0'
    int err = errno;
    nty_event_del(reactor->epfd, ev);
    if (len > 0) {
        ev->length = len;
        ev->buffer[len] = '\0';
        // printf("recv[%d]:%s\n", fd, ev->buffer);
        printf("recv fd=[%d]\n", fd);
        nty_event_set(ev, fd, send_cb, reactor);
        nty_event_add(reactor->epfd, EPOLLOUT, ev);
    } else if (len < 0 && (err == EAGAIN || err == EWOULDBLOCK)) {
        nty_event_add(reactor->epfd, EPOLLIN, ev);  // nothing to read yet
    } else {
        close(ev->fd);  // len == 0: peer closed; len < 0: error
    }
    return len;
}

int send_cb(int fd, int events, void *arg) {
    struct ntyreactor *reactor = (struct ntyreactor *) arg;
    struct ntyevent *ev = ntyreactor_find_event_idx(reactor, fd);
    if (ev == NULL) return -1;

    int len = send(fd, ev->buffer, ev->length, MSG_NOSIGNAL);  // no SIGPIPE if peer is gone
    if (len > 0) {
        printf("send fd=[%d]\n", fd);
        nty_event_del(reactor->epfd, ev);
        nty_event_set(ev, fd, recv_cb, reactor);
        nty_event_add(reactor->epfd, EPOLLIN, ev);
    } else {
        printf("send[fd=%d] error %s\n", fd, strerror(errno));
        nty_event_del(reactor->epfd, ev);
        close(ev->fd);
    }
    return len;
}

int accept_cb(int fd, int events, void *arg) {  // listenfd is non-blocking
    struct ntyreactor *reactor = (struct ntyreactor *) arg;
    if (reactor == NULL) return -1;

    struct sockaddr_in client_addr;
    socklen_t len = sizeof(client_addr);
    int clientfd = accept(fd, (struct sockaddr *) &client_addr, &len);
    if (clientfd == -1) {
        printf("accept: %s\n", strerror(errno));
        return -1;
    }
    int flags = fcntl(clientfd, F_GETFL, 0);
    if (flags < 0 || fcntl(clientfd, F_SETFL, flags | O_NONBLOCK) < 0) {
        printf("%s: fcntl nonblocking failed: %s\n", __func__, strerror(errno));
        close(clientfd);
        return -1;
    }
    // Finds the slot for clientfd, allocating new eventblocks if needed.
    struct ntyevent *event = ntyreactor_find_event_idx(reactor, clientfd);
    if (event == NULL) {
        close(clientfd);
        return -1;
    }
    nty_event_set(event, clientfd, recv_cb, reactor);
    nty_event_add(reactor->epfd, EPOLLIN, event);

    printf("new connect [%s:%d], pos[%d]\n",
           inet_ntoa(client_addr.sin_addr), ntohs(client_addr.sin_port), clientfd);
    return 0;
}

int init_sock(unsigned short port) {
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0) {
        printf("socket failed : %s\n", strerror(errno));
        return -1;
    }
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags >= 0) fcntl(fd, F_SETFL, flags | O_NONBLOCK);  // non-blocking listenfd

    struct sockaddr_in server_addr;
    memset(&server_addr, 0, sizeof(server_addr));
    server_addr.sin_family = AF_INET;
    server_addr.sin_addr.s_addr = htonl(INADDR_ANY);
    server_addr.sin_port = htons(port);
    if (bind(fd, (struct sockaddr *) &server_addr, sizeof(server_addr)) < 0 ||
        listen(fd, 20) < 0) {
        printf("bind/listen on port %u failed : %s\n", port, strerror(errno));
        close(fd);
        return -1;
    }
    return fd;
}

// Append one more eventblock (MAX_EPOLL_EVENTS zeroed slots) to the list.
int ntyreactor_alloc(struct ntyreactor *reactor) {
    if (reactor == NULL) return -1;
    if (reactor->evblk == NULL) return -1;

    struct eventblock *blk = reactor->evblk;
    while (blk->next != NULL) blk = blk->next;  // find the tail

    struct ntyevent *evs = (struct ntyevent *) calloc(MAX_EPOLL_EVENTS, sizeof(struct ntyevent));
    if (evs == NULL) {
        printf("ntyreactor_alloc ntyevents failed\n");
        return -2;
    }
    struct eventblock *block = (struct eventblock *) calloc(1, sizeof(struct eventblock));
    if (block == NULL) {
        printf("ntyreactor_alloc eventblock failed\n");
        free(evs);
        return -2;
    }
    block->events = evs;
    block->next = NULL;
    blk->next = block;
    reactor->blkcnt++;
    return 0;
}

// Map sockfd to its ntyevent: block sockfd / MAX_EPOLL_EVENTS, slot sockfd % MAX_EPOLL_EVENTS.
struct ntyevent *ntyreactor_find_event_idx(struct ntyreactor *reactor, int sockfd) {
    if (reactor == NULL || sockfd < 0) return NULL;
    int blkidx = sockfd / MAX_EPOLL_EVENTS;
    while (blkidx >= reactor->blkcnt) {
        if (ntyreactor_alloc(reactor) != 0) return NULL;  // avoid looping forever on OOM
    }
    int i = 0;
    struct eventblock *blk = reactor->evblk;
    while (i++ < blkidx && blk != NULL) blk = blk->next;
    return blk ? &blk->events[sockfd % MAX_EPOLL_EVENTS] : NULL;
}

int ntyreactor_init(struct ntyreactor *reactor) {
    if (reactor == NULL) return -1;
    memset(reactor, 0, sizeof(struct ntyreactor));
    reactor->epfd = epoll_create(1);
    if (reactor->epfd < 0) {
        printf("create epfd in %s err %s\n", __func__, strerror(errno));
        return -2;
    }
    struct ntyevent *evs = (struct ntyevent *) calloc(MAX_EPOLL_EVENTS, sizeof(struct ntyevent));
    if (evs == NULL) {
        printf("ntyreactor_init ntyevents failed\n");
        close(reactor->epfd);
        return -2;
    }
    struct eventblock *block = (struct eventblock *) calloc(1, sizeof(struct eventblock));
    if (block == NULL) {
        printf("ntyreactor_init eventblock failed\n");
        free(evs);
        close(reactor->epfd);
        return -2;
    }
    block->events = evs;
    block->next = NULL;
    reactor->evblk = block;
    reactor->blkcnt = 1;
    return 0;
}

int ntyreactor_destory(struct ntyreactor *reactor) {
    close(reactor->epfd);
    struct eventblock *blk = reactor->evblk;
    while (blk != NULL) {
        struct eventblock *blk_next = blk->next;
        free(blk->events);
        free(blk);
        blk = blk_next;
    }
    return 0;
}

int ntyreactor_addlistener(struct ntyreactor *reactor, int sockfd, NCALLBACK acceptor) {
    if (reactor == NULL) return -1;
    if (reactor->evblk == NULL) return -1;
    struct ntyevent *event = ntyreactor_find_event_idx(reactor, sockfd);
    if (event == NULL) return -1;
    nty_event_set(event, sockfd, acceptor, reactor);
    return nty_event_add(reactor->epfd, EPOLLIN, event);
}

int ntyreactor_run(struct ntyreactor *reactor) {
    if (reactor == NULL) return -1;
    if (reactor->epfd < 0) return -1;
    if (reactor->evblk == NULL) return -1;

    struct epoll_event events[MAX_EPOLL_EVENTS + 1];
    int i;
    while (1) {
        int nready = epoll_wait(reactor->epfd, events, MAX_EPOLL_EVENTS, 1000);
        if (nready < 0) {
            if (errno == EINTR) continue;
            printf("epoll_wait error: %s, exit\n", strerror(errno));
            return -1;
        }
        for (i = 0; i < nready; i++) {
            struct ntyevent *ev = (struct ntyevent *) events[i].data.ptr;
            // Invoke the callback only if the ready event is one ev is currently waiting for.
            if ((events[i].events & EPOLLIN) && (ev->events & EPOLLIN)) {
                ev->callback(ev->fd, events[i].events, ev->arg);
            }
            if ((events[i].events & EPOLLOUT) && (ev->events & EPOLLOUT)) {
                ev->callback(ev->fd, events[i].events, ev->arg);
            }
        }
    }
}

// A TCP connection is identified by <remoteip, remoteport, localip, localport, protocol>;
// listening on PORT_COUNT local ports lets one test client open more connections.
int main(int argc, char *argv[]) {
    unsigned short port = SERVER_PORT;  // listen on 8081 by default
    if (argc == 2) {
        port = atoi(argv[1]);
    }

    struct ntyreactor *reactor = (struct ntyreactor *) malloc(sizeof(struct ntyreactor));
    if (reactor == NULL || ntyreactor_init(reactor) != 0) {
        free(reactor);
        return -1;
    }

    int i = 0;
    int sockfds[PORT_COUNT] = {0};
    for (i = 0; i < PORT_COUNT; i++) {
        sockfds[i] = init_sock(port + i);
        if (sockfds[i] >= 0) ntyreactor_addlistener(reactor, sockfds[i], accept_cb);
    }
    ntyreactor_run(reactor);  // returns only on a fatal epoll_wait error

    ntyreactor_destory(reactor);
    for (i = 0; i < PORT_COUNT; i++) {
        if (sockfds[i] >= 0) close(sockfds[i]);
    }
    free(reactor);
    return 0;
}
```
