Python中ROS和OpenCV结合处理图像问题

目录

- 一、安装ROS-OpenCV

- 二、简单案例分析

- 1.usb_cam.launch

- 2.cv_bridge_test.py

- 3.rqt_image_view

- 三、CvBridge相关API

- 1.imgmsg_to_cv2()

- 2.cv2_to_imgmsg()

- 四、利用ROS+OpenCV实现人脸检测案例

- 1.usb_cam.launch

- 2.face_detector.launch

- 2.1 launch

- 2.2 face_detector.py

- 2.3 两个xml文件

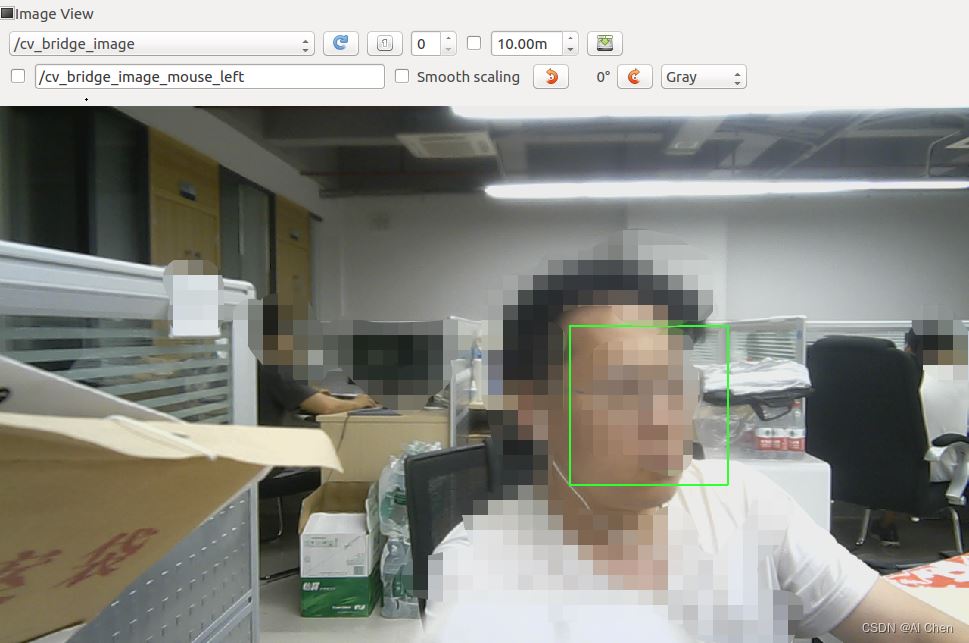

- 3.rqt_image_view

- 五、利用ROS+OpenCV实现帧差法物体追踪

- 1.usb_cam.launch

- 2.motion_detector.launch

- 2.1 launch

- 2.2 motion_detector.py

- 3.rqt_image_view

一、安装ROS-OpenCV

安装OpenCVsudo apt-get install ros-kinetic-vision-opencv libopencv-dev python-opencv

ROS进行图像处理是依赖于OpenCV库的。ROS通过一个叫CvBridge的功能包,将获取的图像数据转换成OpenCV的格式,OpenCV处理之后,传回给ROS进行图像显示(应用),如下图:

二、简单案例分析

我们使用ROS驱动获取摄像头数据,将ROS获得的数据通过CvBridge转换成OpenCV需要的格式,调用OpenCV的算法库对这个图片进行处理(如画一个圆),然后返回给ROS进行rviz显示。

1.usb_cam.launch

首先我们建立一个launch文件,可以调用摄像头驱动获取图像数据。运行launch文件roslaunch xxx(功能包名) usb_cam.launch

<launch>

<node name="usb_cam" pkg="usb_cam" type="usb_cam_node" output="screen" >

<param name="video_device" value="/dev/video0" />

<param name="image_width" value="1280" />

<param name="image_height" value="720" />

<param name="pixel_format" value="yuyv" />

<param name="camera_frame_id" value="usb_cam" />

<param name="io_method" value="mmap"/>

</node>

</launch>

2.cv_bridge_test.py

建立一个py文件,是python2的。实现接收ROS发的图像信息,在图像上画一个圆后,返回给ROS。返回的话题名称是cv_bridge_image。运行py文件rosrun xxx(功能包名) cv_bridge_test.py

如果出现权限不够的情况,记得切换到py文件目录下执行:sudo chmod +x *.py

#!/usr/bin/env python

# -*- coding: utf-8 -*-

import rospy

import cv2

from cv_bridge import CvBridge, CvBridgeError

from sensor_msgs.msg import Image

class image_converter:

def __init__(self):

# 创建cv_bridge,声明图像的发布者和订阅者

self.image_pub = rospy.Publisher("cv_bridge_image", Image, queue_size=1)

self.bridge = CvBridge()

self.image_sub = rospy.Subscriber("/usb_cam/image_raw", Image, self.callback)

def callback(self,data):

# 使用cv_bridge将ROS的图像数据转换成OpenCV的图像格式

try:

cv_image = self.bridge.imgmsg_to_cv2(data, "bgr8")

except CvBridgeError as e:

print e

# 在opencv的显示窗口中绘制一个圆,作为标记

(rows,cols,channels) = cv_image.shape

if cols > 60 and rows > 60 :

cv2.circle(cv_image, (60, 60), 30, (0,0,255), -1)

# 显示Opencv格式的图像

cv2.imshow("Image window", cv_image)

cv2.waitKey(3)

# 再将opencv格式额数据转换成ros image格式的数据发布

try:

self.image_pub.publish(self.bridge.cv2_to_imgmsg(cv_image, "bgr8"))

except CvBridgeError as e:

print e

if __name__ == '__main__':

try:

# 初始化ros节点

rospy.init_node("cv_bridge_test")

rospy.loginfo("Starting cv_bridge_test node")

image_converter()

rospy.spin()

except KeyboardInterrupt:

print "Shutting down cv_bridge_test node."

cv2.destroyAllWindows()

3.rqt_image_view

在终端下执行rqt_image_view,订阅cv_bridge_image话题,可以发现OpenCV处理之后的图像在ROS中显示出来。

三、CvBridge相关API

1.imgmsg_to_cv2()

将ROS图像消息转换成OpenCV图像数据;

# 使用cv_bridge将ROS的图像数据转换成OpenCV的图像格式

try:

cv_image = self.bridge.imgmsg_to_cv2(data, "bgr8")

except CvBridgeError as e:

print e

2.cv2_to_imgmsg()

将OpenCV格式的图像数据转换成ROS图像消息;

# 再将opencv格式额数据转换成ros image格式的数据发布

try:

self.image_pub.publish(self.bridge.cv2_to_imgmsg(cv_image, "bgr8"))

except CvBridgeError as e:

print e

四、利用ROS+OpenCV实现人脸检测案例

1.usb_cam.launch

这个launch和上一个案例一样先打开摄像头驱动获取图像数据。运行launch文件roslaunch xxx(功能包名) usb_cam.launch

<launch>

<node name="usb_cam" pkg="usb_cam" type="usb_cam_node" output="screen" >

<param name="video_device" value="/dev/video0" />

<param name="image_width" value="1280" />

<param name="image_height" value="720" />

<param name="pixel_format" value="yuyv" />

<param name="camera_frame_id" value="usb_cam" />

<param name="io_method" value="mmap"/>

</node>

</launch>

2.face_detector.launch

人脸检测算法采用基于Harr特征的级联分类器对象检测算法,检测效果并不佳。但是这里只是为了演示如何使用ROS和OpenCV进行图像处理,所以不必在乎算法本身效果。整个launch调用了一个py文件和两个xml文件,分别如下:

2.1 launch

<launch>

<node pkg="robot_vision" name="face_detector" type="face_detector.py" output="screen">

<remap from="input_rgb_image" to="/usb_cam/image_raw" />

<rosparam>

haar_scaleFactor: 1.2

haar_minNeighbors: 2

haar_minSize: 40

haar_maxSize: 60

</rosparam>

<param name="cascade_1" value="$(find robot_vision)/data/haar_detectors/haarcascade_frontalface_alt.xml" />

<param name="cascade_2" value="$(find robot_vision)/data/haar_detectors/haarcascade_profileface.xml" />

</node>

</launch>

2.2 face_detector.py

#!/usr/bin/env python

# -*- coding: utf-8 -*-

import rospy

import cv2

import numpy as np

from sensor_msgs.msg import Image, RegionOfInterest

from cv_bridge import CvBridge, CvBridgeError

class faceDetector:

def __init__(self):

rospy.on_shutdown(self.cleanup);

# 创建cv_bridge

self.bridge = CvBridge()

self.image_pub = rospy.Publisher("cv_bridge_image", Image, queue_size=1)

# 获取haar特征的级联表的XML文件,文件路径在launch文件中传入

cascade_1 = rospy.get_param("~cascade_1", "")

cascade_2 = rospy.get_param("~cascade_2", "")

# 使用级联表初始化haar特征检测器

self.cascade_1 = cv2.CascadeClassifier(cascade_1)

self.cascade_2 = cv2.CascadeClassifier(cascade_2)

# 设置级联表的参数,优化人脸识别,可以在launch文件中重新配置

self.haar_scaleFactor = rospy.get_param("~haar_scaleFactor", 1.2)

self.haar_minNeighbors = rospy.get_param("~haar_minNeighbors", 2)

self.haar_minSize = rospy.get_param("~haar_minSize", 40)

self.haar_maxSize = rospy.get_param("~haar_maxSize", 60)

self.color = (50, 255, 50)

# 初始化订阅rgb格式图像数据的订阅者,此处图像topic的话题名可以在launch文件中重映射

self.image_sub = rospy.Subscriber("input_rgb_image", Image, self.image_callback, queue_size=1)

def image_callback(self, data):

# 使用cv_bridge将ROS的图像数据转换成OpenCV的图像格式

try:

cv_image = self.bridge.imgmsg_to_cv2(data, "bgr8")

frame = np.array(cv_image, dtype=np.uint8)

except CvBridgeError, e:

print e

# 创建灰度图像

grey_image = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

# 创建平衡直方图,减少光线影响

grey_image = cv2.equalizeHist(grey_image)

# 尝试检测人脸

faces_result = self.detect_face(grey_image)

# 在opencv的窗口中框出所有人脸区域

if len(faces_result)>0:

for face in faces_result:

x, y, w, h = face

cv2.rectangle(cv_image, (x, y), (x+w, y+h), self.color, 2)

# 将识别后的图像转换成ROS消息并发布

self.image_pub.publish(self.bridge.cv2_to_imgmsg(cv_image, "bgr8"))

def detect_face(self, input_image):

# 首先匹配正面人脸的模型

if self.cascade_1:

faces = self.cascade_1.detectMultiScale(input_image,

self.haar_scaleFactor,

self.haar_minNeighbors,

cv2.CASCADE_SCALE_IMAGE,

(self.haar_minSize, self.haar_maxSize))

# 如果正面人脸匹配失败,那么就尝试匹配侧面人脸的模型

if len(faces) == 0 and self.cascade_2:

faces = self.cascade_2.detectMultiScale(input_image,

self.haar_scaleFactor,

self.haar_minNeighbors,

cv2.CASCADE_SCALE_IMAGE,

(self.haar_minSize, self.haar_maxSize))

return faces

def cleanup(self):

print "Shutting down vision node."

cv2.destroyAllWindows()

if __name__ == '__main__':

try:

# 初始化ros节点

rospy.init_node("face_detector")

faceDetector()

rospy.loginfo("Face detector is started..")

rospy.loginfo("Please subscribe the ROS image.")

rospy.spin()

except KeyboardInterrupt:

print "Shutting down face detector node."

cv2.destroyAllWindows()

2.3 两个xml文件

链接

3.rqt_image_view

运行完上述两个launch文件后,在终端下执行rqt_image_view,订阅cv_bridge_image话题,可以发现OpenCV处理之后的图像在ROS中显示出来。

五、利用ROS+OpenCV实现帧差法物体追踪

1.usb_cam.launch

这个launch和前两个案例一样先打开摄像头驱动获取图像数据。运行launch文件roslaunch xxx(功能包名) usb_cam.launch

<launch>

<node name="usb_cam" pkg="usb_cam" type="usb_cam_node" output="screen" >

<param name="video_device" value="/dev/video0" />

<param name="image_width" value="1280" />

<param name="image_height" value="720" />

<param name="pixel_format" value="yuyv" />

<param name="camera_frame_id" value="usb_cam" />

<param name="io_method" value="mmap"/>

</node>

</launch>

2.motion_detector.launch

物体追踪方法采用帧差法,追踪效果并不佳。但是这里只是为了演示如何使用ROS和OpenCV进行图像处理,所以不必在乎算法本身效果。整个launch调用了一个py文件,如下:

2.1 launch

<launch>

<node pkg="robot_vision" name="motion_detector" type="motion_detector.py" output="screen">

<remap from="input_rgb_image" to="/usb_cam/image_raw" />

<rosparam>

minArea: 500

threshold: 25

</rosparam>

</node>

</launch>

2.2 motion_detector.py

#!/usr/bin/env python

# -*- coding: utf-8 -*-

import rospy

import cv2

import numpy as np

from sensor_msgs.msg import Image, RegionOfInterest

from cv_bridge import CvBridge, CvBridgeError

class motionDetector:

def __init__(self):

rospy.on_shutdown(self.cleanup);

# 创建cv_bridge

self.bridge = CvBridge()

self.image_pub = rospy.Publisher("cv_bridge_image", Image, queue_size=1)

# 设置参数:最小区域、阈值

self.minArea = rospy.get_param("~minArea", 500)

self.threshold = rospy.get_param("~threshold", 25)

self.firstFrame = None

self.text = "Unoccupied"

# 初始化订阅rgb格式图像数据的订阅者,此处图像topic的话题名可以在launch文件中重映射

self.image_sub = rospy.Subscriber("input_rgb_image", Image, self.image_callback, queue_size=1)

def image_callback(self, data):

# 使用cv_bridge将ROS的图像数据转换成OpenCV的图像格式

try:

cv_image = self.bridge.imgmsg_to_cv2(data, "bgr8")

frame = np.array(cv_image, dtype=np.uint8)

except CvBridgeError, e:

print e

# 创建灰度图像

gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

gray = cv2.GaussianBlur(gray, (21, 21), 0)

# 使用两帧图像做比较,检测移动物体的区域

if self.firstFrame is None:

self.firstFrame = gray

return

frameDelta = cv2.absdiff(self.firstFrame, gray)

thresh = cv2.threshold(frameDelta, self.threshold, 255, cv2.THRESH_BINARY)[1]

thresh = cv2.dilate(thresh, None, iterations=2)

binary, cnts, hierarchy= cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

for c in cnts:

# 如果检测到的区域小于设置值,则忽略

if cv2.contourArea(c) < self.minArea:

continue

# 在输出画面上框出识别到的物体

(x, y, w, h) = cv2.boundingRect(c)

cv2.rectangle(frame, (x, y), (x + w, y + h), (50, 255, 50), 2)

self.text = "Occupied"

# 在输出画面上打当前状态和时间戳信息

cv2.putText(frame, "Status: {}".format(self.text), (10, 20),

cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255), 2)

# 将识别后的图像转换成ROS消息并发布

self.image_pub.publish(self.bridge.cv2_to_imgmsg(frame, "bgr8"))

def cleanup(self):

print "Shutting down vision node."

cv2.destroyAllWindows()

if __name__ == '__main__':

try:

# 初始化ros节点

rospy.init_node("motion_detector")

rospy.loginfo("motion_detector node is started...")

rospy.loginfo("Please subscribe the ROS image.")

motionDetector()

rospy.spin()

except KeyboardInterrupt:

print "Shutting down motion detector node."

cv2.destroyAllWindows()

3.rqt_image_view

运行完上述两个launch文件后,在终端下执行rqt_image_view,订阅cv_bridge_image话题,可以发现OpenCV处理之后的图像在ROS中显示出来。(鉴于我的测试环境比较糟糕,并且这个算法本身精度不高,就不展示最终效果了)

到此这篇关于Python中ROS和OpenCV结合处理图像问题的文章就介绍到这了,更多相关ROS和OpenCV处理图像内容请搜索我们以前的文章或继续浏览下面的相关文章希望大家以后多多支持我们!