Drawing the Les Misérables Character Network in Python with networkx

Contents

- About the dataset

- Data processing

- Drawing the graph

- The dataset bundled with networkx

- Complete code

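About the dataset

The dataset is the character relationship graph of Les Misérables, with 77 nodes and 254 edges.

Screenshot of the dataset:

Opening the README file: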

Les Misérables network, part of the Koblenz Network Collection

===========================================================================

This directory contains the TSV and related files of the moreno_lesmis network: This undirected network contains co-occurrences of characters in Victor Hugo's novel 'Les Misérables'. A node represents a character and an edge between two nodes shows that these two characters appeared in the same chapter of the book. The weight of each link indicates how often such a co-appearance occurred.

More information about the network is provided here:

http://konect.cc/networks/moreno_lesmis

Files:

meta.moreno_lesmis -- Metadata about the network

out.moreno_lesmis -- The adjacency matrix of the network in whitespace-separated values format, with one edge per line

The meaning of the columns in out.moreno_lesmis are:

First column: ID of from node

Second column: ID of to node

Third column (if present): weight or multiplicity of edge

Fourth column (if present): timestamp of edges Unix time

Third column: edge weight

Use the following References for citation:

@MISC{konect:2017:moreno_lesmis,

title = {Les Misérables network dataset -- {KONECT}},

month = oct,

year = {2017},

url = {http://konect.cc/networks/moreno_lesmis}

}

@book{konect:knuth1993,

title = {The {Stanford} {GraphBase}: A Platform for Combinatorial Computing},

author = {Knuth, Donald Ervin},

volume = {37},

year = {1993},

publisher = {Addison-Wesley Reading},

}

@inproceedings{konect,

title = {{KONECT} -- {The} {Koblenz} {Network} {Collection}},

author = {Jérôme Kunegis},

year = {2013},

booktitle = {Proc. Int. Conf. on World Wide Web Companion},

pages = {1343--1350},

url = {http://dl.acm.org/citation.cfm?id=2488173},

url_presentation = {https://www.slideshare.net/kunegis/presentationwow},

url_web = {http://konect.cc/},

url_citations = {https://scholar.google.com/scholar?cites=7174338004474749050},

}

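The README tells us that the graph is undirected, that each node is a character in Les Misérables, that an edge between two nodes means the two characters appear in the same chapter, and that the weight of an edge is the number of chapters in which the two characters appear together.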
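To make the meaning of the edge weights concrete, here is a minimal sketch of how such a co-occurrence graph could be built. The chapter lists and the character names A, B and C are invented toy data, not taken from the novel or from the dataset.

```python
from collections import Counter
from itertools import combinations

import networkx as nx

# Toy data (invented for illustration): the characters appearing in each chapter.
chapters = [
    ["A", "B"],
    ["A", "B", "C"],
    ["B", "C"],
]

# Count in how many chapters each unordered pair of characters appears together.
pair_counts = Counter()
for characters in chapters:
    for u, v in combinations(sorted(set(characters)), 2):
        pair_counts[(u, v)] += 1

# Build the undirected graph; the chapter count becomes the edge weight.
g = nx.Graph()
g.add_weighted_edges_from((u, v, w) for (u, v), w in pair_counts.items())

print(list(g.edges(data="weight")))
```

A and B share two chapters, so their edge gets weight 2, while A and C share only one.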

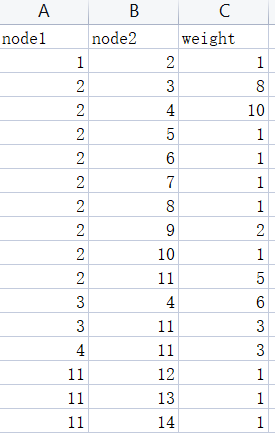

The actual data is in out.moreno_lesmis. Open it and save it as a CSV file (out.csv in the code that follows).
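The CSV conversion can probably be skipped, because networkx can parse whitespace-separated edge lists directly. The sketch below assumes that the header lines of out.moreno_lesmis start with %, which the README does not state, so check the file first. The header line in the sample is made up for illustration; the three edges are among the first ones in the dataset.

```python
import networkx as nx

# Sample lines in the assumed layout of out.moreno_lesmis:
# '%' header lines (an assumption), then "from to weight" separated by whitespace.
sample_lines = [
    "% hypothetical header line",
    "1 2 1",
    "2 3 8",
    "2 4 10",
]

# Everything after the comment character is ignored, so the header is skipped.
g = nx.parse_edgelist(
    sample_lines,
    comments="%",
    nodetype=int,
    data=(("weight", int),),
)
print(list(g.edges(data=True)))

# For the real file, a similar call would be (weights are read as floats):
# g = nx.read_weighted_edgelist("out.moreno_lesmis", comments="%", nodetype=int)
```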

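Data processing

In networkx, an undirected graph is initialized like this:

g = nx.Graph()
g.add_nodes_from([i for i in range(1, 78)])
g.add_edges_from([(1, 2, {'weight': 1})])

Adding the nodes is straightforward, so the main task is the edges: convert the DataFrame to a list of rows, replace the weight in each row with an attribute dict, and then turn each row into a tuple.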

df = pd.read_csv('out.csv')
res = df.values.tolist()
for i in range(len(res)):
    # wrap the weight in the attribute dict that add_edges_from expects
    res[i][2] = {'weight': res[i][2]}
res = [tuple(x) for x in res]
print(res)

Part of the output for res:

[(1, 2, {'weight': 1}), (2, 3, {'weight': 8}), (2, 4, {'weight': 10}), (2, 5, {'weight': 1}), (2, 6, {'weight': 1}), (2, 7, {'weight': 1}), (2, 8, {'weight': 1})...]

So the graph is initialized as follows:

g = nx.Graph()
g.add_nodes_from([i for i in range(1, 78)])
g.add_edges_from(res)
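The manual conversion to tuples can also be delegated to networkx, which provides from_pandas_edgelist for building a graph from a DataFrame. In this sketch the column names source, target and weight are assumptions (the header of out.csv is not shown in this article), and the DataFrame is built inline from the first few edges printed above instead of being read from the file.

```python
import networkx as nx
import pandas as pd

# Hypothetical column names; the rows are the first edges from the printed output.
df = pd.DataFrame(
    [(1, 2, 1), (2, 3, 8), (2, 4, 10)],
    columns=["source", "target", "weight"],
)

# Each row becomes one undirected edge carrying a 'weight' attribute.
g = nx.from_pandas_edgelist(df, source="source", target="target", edge_attr="weight")
print(g.number_of_nodes(), g.number_of_edges())
```

Note that this only creates nodes that occur in at least one edge, so any isolated nodes would still have to be added with add_nodes_from.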

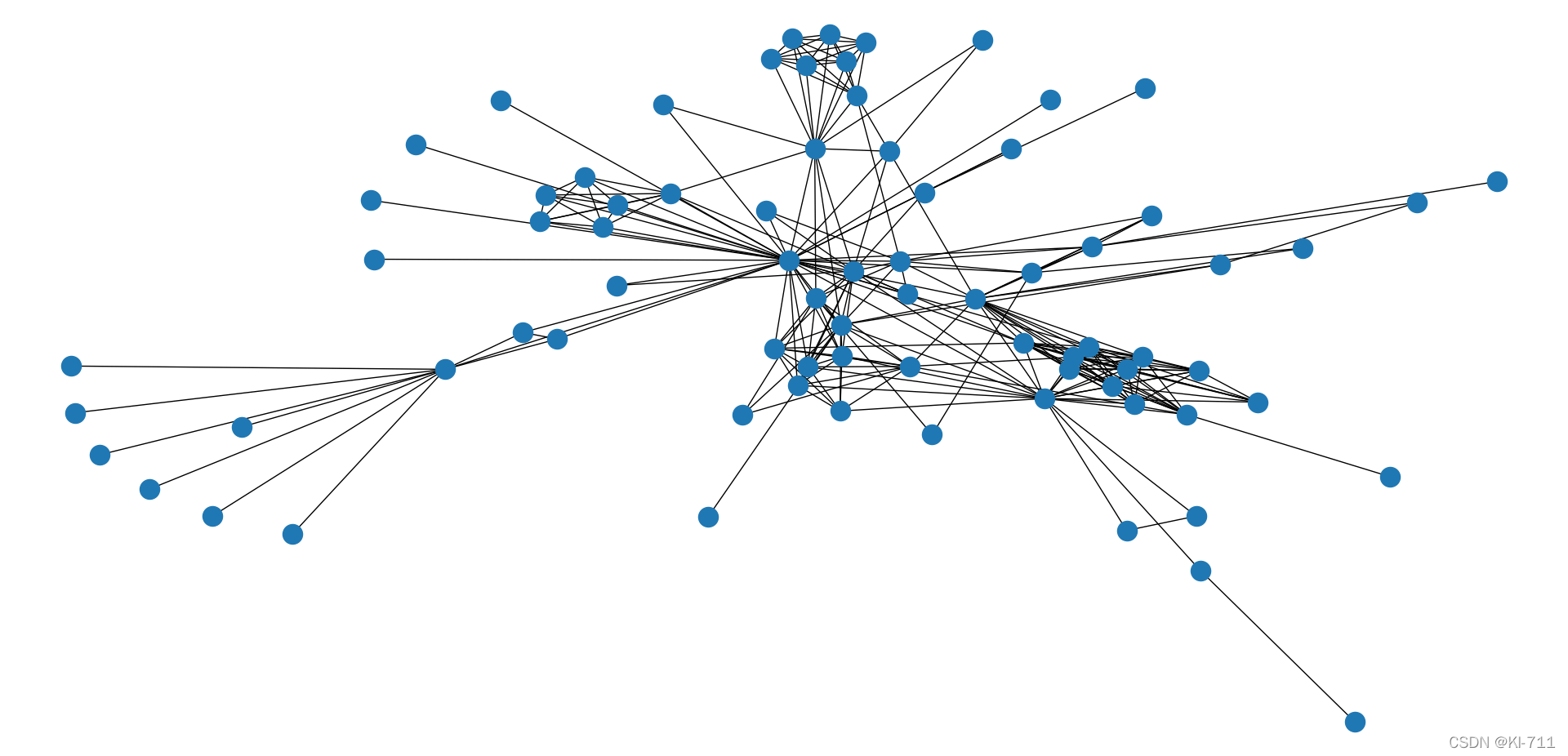

Drawing the graph

nx.draw(g)
plt.show()

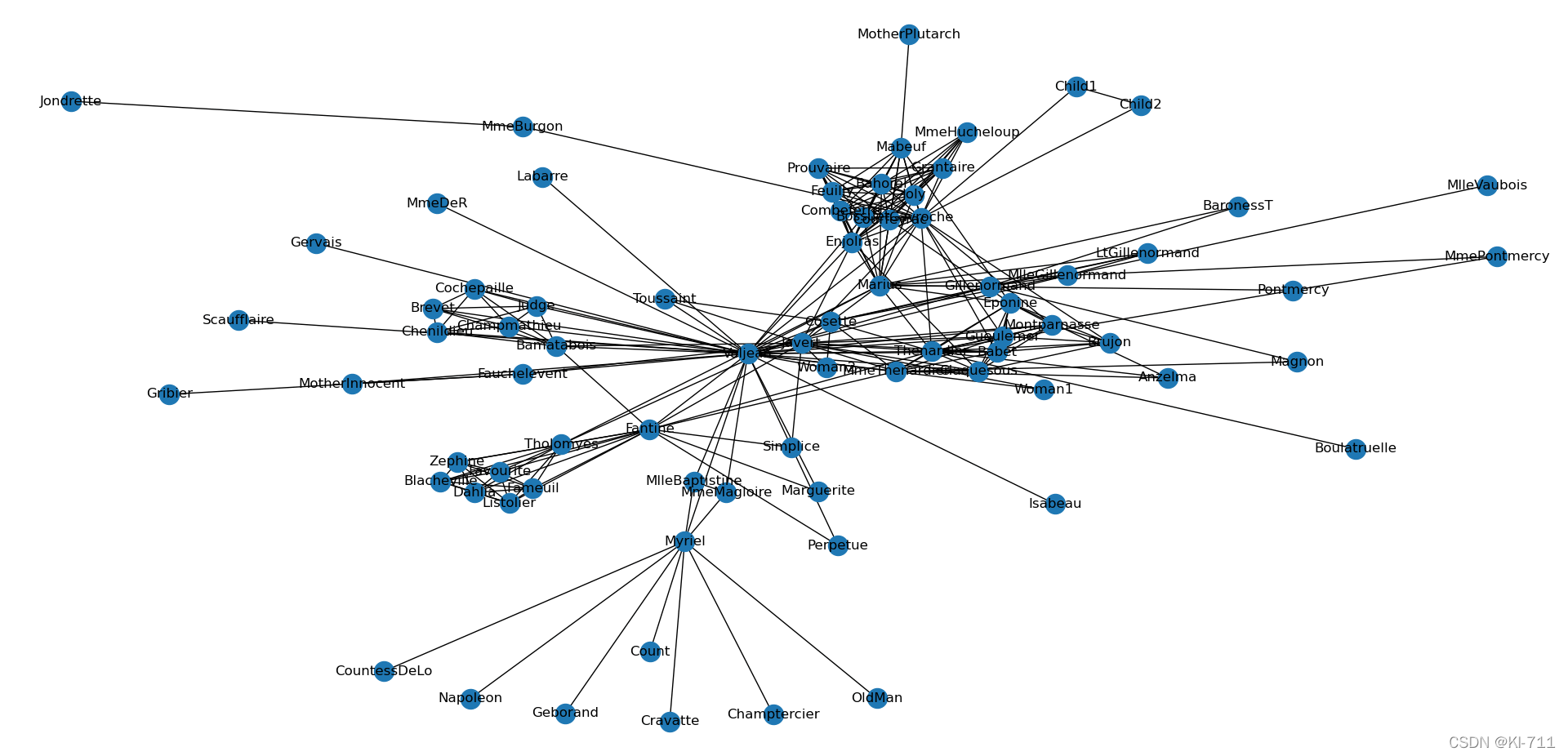
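The default drawing ignores the edge weights. One possible refinement, sketched here on a handful of edges from the dataset, is to compute a layout explicitly and scale the line width by the weight. The scale factor, the seed, the Agg backend and the output filename are illustrative choices, not part of the original workflow.

```python
import matplotlib

matplotlib.use("Agg")  # assumption: render to a file so no display is needed
import matplotlib.pyplot as plt
import networkx as nx

# A few edges from the dataset as (from, to, weight).
g = nx.Graph()
g.add_weighted_edges_from([(1, 2, 1), (2, 3, 8), (2, 4, 10), (2, 5, 1), (2, 6, 1)])

# A fixed seed makes the force-directed layout reproducible between runs.
pos = nx.spring_layout(g, seed=42)

# Heavier edges are drawn with thicker lines.
widths = [0.5 * d["weight"] for _, _, d in g.edges(data=True)]

fig, ax = plt.subplots(figsize=(6, 5))
nx.draw_networkx_nodes(g, pos, node_size=300, ax=ax)
nx.draw_networkx_edges(g, pos, width=widths, alpha=0.5, ax=ax)
nx.draw_networkx_labels(g, pos, font_size=9, ax=ax)
ax.set_axis_off()
fig.savefig("lesmis_sample.png", dpi=150)  # hypothetical output filename
```

In an interactive session, plt.show() can be used instead of savefig once the Agg line is removed.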

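The dataset bundled with networkx

After all that work, it turns out that networkx ships with built-in datasets, and the Les Misérables character graph is one of them:

g = nx.les_miserables_graph()
nx.draw(g, with_labels=True)
plt.show()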
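A quick way to check that the bundled graph matches the dataset described earlier is to count its nodes and edges. The sketch below also lists the five characters with the largest weighted degree, which is one simple (and by no means the only) way to see who is most central.

```python
import networkx as nx

g = nx.les_miserables_graph()

# In the bundled graph the nodes are character names rather than integer IDs.
print(g.number_of_nodes(), g.number_of_edges())

# Weighted degree: the sum of the weights of all edges attached to a node.
top = sorted(g.degree(weight="weight"), key=lambda pair: pair[1], reverse=True)[:5]
for name, strength in top:
    print(name, strength)
```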

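Complete code

# -*- coding: utf-8 -*-
import networkx as nx
import matplotlib.pyplot as plt
import pandas as pd

# The dataset has 77 nodes and 254 edges
df = pd.read_csv('out.csv')
res = df.values.tolist()
for i in range(len(res)):
    # wrap the weight in the attribute dict that add_edges_from expects
    res[i][2] = {'weight': res[i][2]}
res = [tuple(x) for x in res]
print(res)

# Initialize the graph from the CSV edge list
g = nx.Graph()
g.add_nodes_from([i for i in range(1, 78)])
g.add_edges_from(res)

# Alternatively, use the dataset bundled with networkx
# (this replaces the graph built above)
g = nx.les_miserables_graph()
nx.draw(g, with_labels=True)
plt.show()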
