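Fixing a failure to enter a container caused by Docker running out of disk space

Because the problem came up suddenly and the business side was pressing for a fix, there was no time to capture the original state. The output shown below was taken later, after the environment was back to normal.

On Monday morning I logged in to the server as usual and tried to enter a Docker container: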

>>> docker exec -i -t xxx /bin/bash

Docker error : no space left on device

No space left?

My first thought was to check the system's disk usage:

>>> df -h

Filesystem      Size  Used Avail Use% Mounted on
devtmpfs        3.9G     0  3.9G   0% /dev
tmpfs           3.9G     0  3.9G   0% /dev/shm
tmpfs           3.9G  984K  3.9G   1% /run
tmpfs           3.9G     0  3.9G   0% /sys/fs/cgroup
/dev/vda1        50G   50G    0G 100% /
overlay          50G  5.9G   41G  13% /var/lib/docker/overlay2/4d0941b78fa413f3b77111735e06045b41351748bcea7964205bcfbf9d4ec0b6/merged
overlay          50G  5.9G   41G  13% /var/lib/docker/overlay2/54a7a7b66d9c12d7e42158d177a6b67321f7da1f223b301e838e9bc109a2bda4/merged
shm              64M     0   64M   0% /var/lib/docker/containers/712f65baea85d898f6c948e7149f84f2f5eaf3b1934540603f32ab278f9acec4/mounts/shm
shm              64M     0   64M   0% /var/lib/docker/containers/a5fa673086c8f46ba98fc4425b353ed2e12de3277a5fe2dc5b8b7affa63b4518/mounts/shm
overlay          50G  5.9G   41G  13% /var/lib/docker/overlay2/7fbbc8a29119a1eaa1f212c50b75405a1f16fd68e3ae3949cc0c963d0727a9ab/merged
shm              64M     0   64M   0% /var/lib/docker/containers/011a83deceacecbacb4ef7eb06eb5b812babf9e83914a4fb33d4925cc1ad375b/mounts/shm
tmpfs           783M     0  783M   0% /run/user/0

So the root filesystem was full.

Digging further:
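The same check can be scripted so a full filesystem stands out immediately. The following is a minimal sketch, assuming POSIX-format `df -P` output (one line per filesystem). The function name `full_filesystems` and the 90% default threshold are illustrative choices, not something from the original incident.

```shell
#!/usr/bin/env bash
# full_filesystems [THRESHOLD]
# Reads `df -P` output on stdin and prints "USE% MOUNTPOINT" for every
# filesystem whose usage is at or above THRESHOLD percent (default 90).
# Assumption: mount points contain no spaces (only the first word is printed).
full_filesystems() {
    awk -v limit="${1:-90}" '
        NR == 1 { next }              # skip the header row
        {
            pct = $5
            sub(/%/, "", pct)         # "100%" -> "100"
            if (pct + 0 >= limit + 0) print $5, $6
        }'
}

# Usage (the threshold value is only an example):
#   df -P | full_filesystems 95
```

Fed the `df` output above, this would report only the root filesystem, since it is the only entry at 100%.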

>>> cd /
>>> du -h --max-depth=1

984K    ./run
16K     ./opt
13M     ./root
4.0K    ./media
du: cannot access ‘./proc/4382/task/4382/fd/4’: No such file or directory
du: cannot access ‘./proc/4382/task/4382/fdinfo/4’: No such file or directory
du: cannot access ‘./proc/4382/fd/3’: No such file or directory
du: cannot access ‘./proc/4382/fdinfo/3’: No such file or directory
0       ./proc
204M    ./boot
12K     ./redis
39M     ./etc
16K     ./lost+found
4.0K    ./srv
0       ./sys
47G     ./var
2.8G    ./usr
4.0K    ./mnt
36K     ./tmp
0       ./dev
4.0K    ./home
50G     .

>>> cd var
>>> du -h --max-depth=1

116M    ./cache
8.0K    ./empty
4.0K    ./games
4.0K    ./opt
24K     ./db
46G     ./lib
4.0K    ./gopher
4.0K    ./adm
4.0K    ./crash
12K     ./kerberos
4.0K    ./preserve
4.0K    ./nis
16K     ./tmp
4.0K    ./yp
4.0K    ./local
104K    ./spool
374M    ./log
47G     .

>>> cd lib
>>> du -h --max-depth=1

76K     ./systemd
24K     ./NetworkManager
4.0K    ./tuned
4.0K    ./games
248K    ./cloud
215M    ./rpm
8.0K    ./plymouth
46G     ./docker
248K    ./containerd
4.0K    ./dbus
4.0K    ./initramfs
4.0K    ./os-prober
8.0K    ./rsyslog
24K     ./alternatives
8.0K    ./authconfig
12K     ./stateless
4.0K    ./misc
4.0K    ./ntp
8.0K    ./dhclient
4.0K    ./selinux
8.0K    ./chrony
4.0K    ./rpm-state
12M     ./yum
8.0K    ./postfix
28K     ./polkit-1
4.0K    ./machines
8.0K    ./logrotate
46G     .

>>> cd docker
>>> du -h --max-depth=1

84K     ./network
108K    ./buildkit
4.0K    ./trust
4.0K    ./runtimes
5.7M    ./image
42G     ./volumes
24K     ./plugins
4.0K    ./tmp
20K     ./builder
180K    ./containers
3.3G    ./overlay2
4.0K    ./swarm
46G     .

>>> cd volumes
>>> du -h --max-depth=1

172K    ./kudu_to_jdy_kudu_to_jdy
42G     ./jdy_extensions_logs
748K    ./bot_etl_bot_etl
42G     .

So the jdy_extensions_logs volume had filled the disk. What followed was tracking down the bug in the code, which is omitted here.
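The repeated "cd, du, pick the biggest entry" loop above can be automated. The following is a rough sketch, assuming GNU `du` (for `--max-depth`) and bash. The function names `largest_child` and `drill_down` are made up for illustration.

```shell
#!/usr/bin/env bash
# largest_child DIR
# Prints "SIZE_KIB<TAB>PATH" for the largest immediate subdirectory of DIR,
# or nothing if DIR has no subdirectories. Assumes GNU du.
largest_child() {
    # -x: stay on one filesystem; -k: sizes in KiB. Errors (for example
    # vanished /proc entries) are discarded. du prints DIR itself last,
    # so the final line is dropped before sorting.
    du -x -k --max-depth=1 "$1" 2>/dev/null | sed '$d' | sort -rn | head -n 1
}

# drill_down DIR
# Follows the largest subdirectory at each level until a directory with
# no subdirectories is reached, printing one line per level.
drill_down() {
    local dir=$1 line
    while line=$(largest_child "$dir") && [ -n "$line" ]; do
        printf '%s\n' "$line"
        dir=$(printf '%s\n' "$line" | cut -f2-)   # keep only the path
    done
}

# Usage (run as root to see everything):
#   drill_down /
```

Always following the single largest child is a heuristic. It fits a case like this one, where one directory dominates, but it can miss space that is spread evenly across several siblings.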

Postscript:

Docker provides many commands for managing containers and images. One of the more useful ones is docker system df.

# Show Docker disk usage
>>> docker system df

TYPE            TOTAL     ACTIVE    SIZE      RECLAIMABLE
Images          7         3         2.146GB   693.2MB (32%)
Containers      3         3         127.5kB   0B (0%)
Local Volumes   3         3         1.511MB   0B (0%)
Build Cache     0         0         0B        0B

# Show a detailed breakdown of the space used
>>> docker system df -v

Images space usage:

REPOSITORY      TAG      IMAGE ID       CREATED        SIZE      SHARED SIZE   UNIQUE SIZE   CONTAINERS
jdy_extension   1.7      e3be3be9664a   15 hours ago   627.6MB   73.86MB       553.7MB       1
<none>          <none>   4b5825747ae9   19 hours ago   74.02MB   73.86MB       158.8kB       0
redis           6.2.1    f877e80bb9ef   2 weeks ago    105.3MB   0B            105.3MB       0
kudu_to_jdy     2.9      888b72288bca   2 weeks ago    538.1MB   73.86MB       464.3MB       1
jdy_to_db       1.9      c345c4e15c1a   7 months ago   587.7MB   73.86MB       513.9MB       0
bot_etl         2.3      020d41691ec7   7 months ago   508.6MB   73.86MB       434.8MB       1
ubuntu          20.04    adafef2e596e   8 months ago   73.86MB   73.86MB       0B            0

Containers space usage:

CONTAINER ID   IMAGE               COMMAND                  LOCAL VOLUMES   SIZE     CREATED        STATUS        NAMES
011a83deceac   jdy_extension:1.7   "supervisord -n -c /…"   1               17.8kB   15 hours ago   Up 15 hours   jdy_extension_1.7
712f65baea85   kudu_to_jdy:2.9     "supervisord -n -c /…"   1               36.9kB   2 weeks ago    Up 45 hours   kudu_to_jdy_2.9
a5fa673086c8   bot_etl:2.3         "supervisord -n -c /…"   1               72.8kB   7 months ago   Up 45 hours   bot_etl_2.3

Local Volumes space usage:

VOLUME NAME               LINKS   SIZE
jdy_extensions_logs       1       658.5kB
kudu_to_jdy_kudu_to_jdy   1       128kB
bot_etl_bot_etl           1       724kB

Build cache usage: 0B

CACHE ID   CACHE TYPE   SIZE   CREATED   LAST USED   USAGE   SHARED
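When the Docker daemon itself is struggling, per-volume sizes can also be read straight from disk. The following is a sketch, assuming the default local volume driver, which keeps each volume in its own directory under /var/lib/docker/volumes (the path seen in the du output earlier). The function name `volume_sizes` is hypothetical.

```shell
#!/usr/bin/env bash
# volume_sizes [VOLUMES_DIR]
# Prints "SIZE_KIB<TAB>VOLUME_NAME" for each directory under VOLUMES_DIR,
# largest first. The default path is an assumption based on this article.
volume_sizes() {
    local root=${1:-/var/lib/docker/volumes} dir
    for dir in "$root"/*/; do
        [ -d "$dir" ] || continue     # the glob matched nothing
        # du -s prints "SIZE<TAB>PATH"; keep the size, swap in the volume name
        printf '%s\t%s\n' "$(du -s -k "$dir" 2>/dev/null | cut -f1)" "$(basename "$dir")"
    done | sort -rn
}

# Usage (reading /var/lib/docker normally requires root):
#   volume_sizes | head -n 5
```

Only directories are matched, so bookkeeping files that Docker keeps alongside the volumes are skipped.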

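# Remove all volumes that are not in use
# (without the dangling=true filter, every volume is passed to rm and Docker reports an error for each one still in use)
>>> docker volume rm $(docker volume ls -q -f dangling=true)

Addendum: a case of Docker disk usage reaching 100%

Running git pull origin master failed with the following error: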

error: RPC failed; HTTP 500 curl 22 The requested URL returned error: 500

fatal: the remote end hung up unexpectedly

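A search on Baidu turned up many posts blaming nginx's request body size limit. However, the configuration file already set the limit to 500M, so that was unlikely to be the cause: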

server {
    listen 80;
    server_name localhost;
    client_max_body_size 500M;

    location / {
        proxy_redirect off;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_pass http://localhost:180;
    }
}

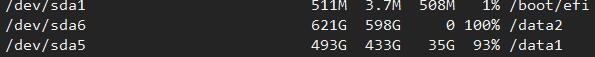

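Then I checked the disks with df -h and found that the data2 disk, where Docker stores its data, was at 100%.

Running docker system prune -a freed a lot of space, and the problem was resolved.

Note: the command above removes the following: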
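When Docker's data does not live on the root disk, as here, it helps to check the filesystem that actually holds it. The following is a small sketch. The helper name `usage_pct` is illustrative, and the commented usage assumes the Docker CLI is installed and the daemon is reachable.

```shell
#!/usr/bin/env bash
# usage_pct PATH
# Prints the usage percentage (digits only) of the filesystem holding PATH.
usage_pct() {
    # -P forces one output line per filesystem, so row 2 is the data row
    df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Usage, assuming the Docker CLI is available:
#   root=$(docker info --format '{{.DockerRootDir}}')
#   echo "Docker data root $root is on a filesystem at $(usage_pct "$root")% usage"
```

`docker info` reports the data root as `DockerRootDir`, which avoids guessing whether the data sits under /var/lib/docker or on a separate disk.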

WARNING! This will remove:
  - all stopped containers
  - all networks not used by at least one container
  - all images without at least one container associated to them
  - all build cache

Are you sure you want to continue? [y/N] y

The above is my personal experience, and I hope it serves as a useful reference. If anything is wrong or incomplete, corrections are welcome.