Thread Pool Monitoring with Spring Boot: Problems and Solutions

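Contents

- Preface

- Why thread pools need monitoring

- How to monitor a thread pool

- Data collection

- Data storage and dashboards

- Further extensions and considerations

- How to configure thread pool parameters sensibly

- How to adjust thread pool parameters dynamically

- How to isolate thread pools between services

- Implementation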

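Preface

This article is groundwork for encouraging asynchronous programming with thread pools. Good monitoring lets everyone use thread pools without worrying about what happens afterwards, and it reflects proper respect for production.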

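Why thread pools need monitoring

Java thread pools are among the most frequently used concurrency tools, and most of us are familiar with them. But are you sure you are using them correctly? The well-known Alibaba Java coding guidelines tell us not to create thread pools through the Executors factory methods. Does abandoning Executors and creating pools another way guarantee there will be no problems? Fundamentally, a running thread pool is a black box to us: when we cannot see its runtime state, we cannot diagnose problems or raise alerts in time. The only way to make that black box transparent is monitoring, which in turn lets us use thread pools well. That is why thread pools must be monitored.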

How to monitor a thread pool

Monitoring comes down to three things: data collection, data storage, and dashboards. Let us go through them in turn.

Data collection

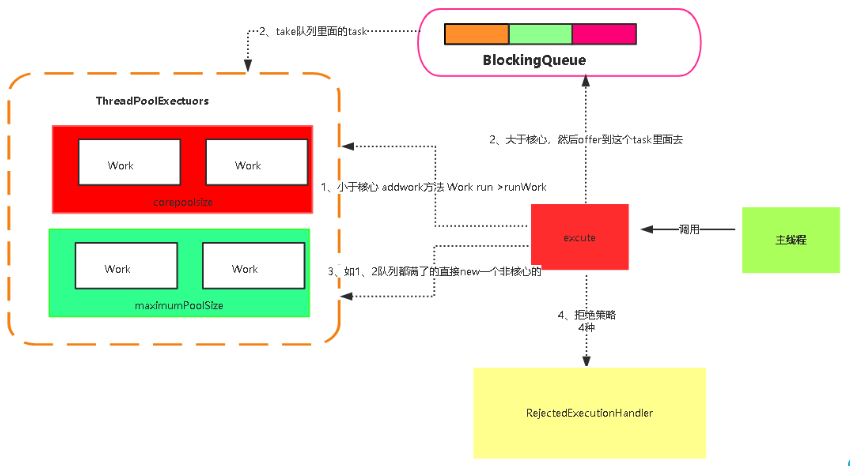

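What should we collect? The data inside the black box, which is to say the whole flow by which the pool processes tasks. Across that flow, seven methods on ThreadPoolExecutor expose the data we need, and the values they return make the pool's execution transparent.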

- getCorePoolSize():获取核心线程数;

- getMaximumPoolSize:获取最大线程数;

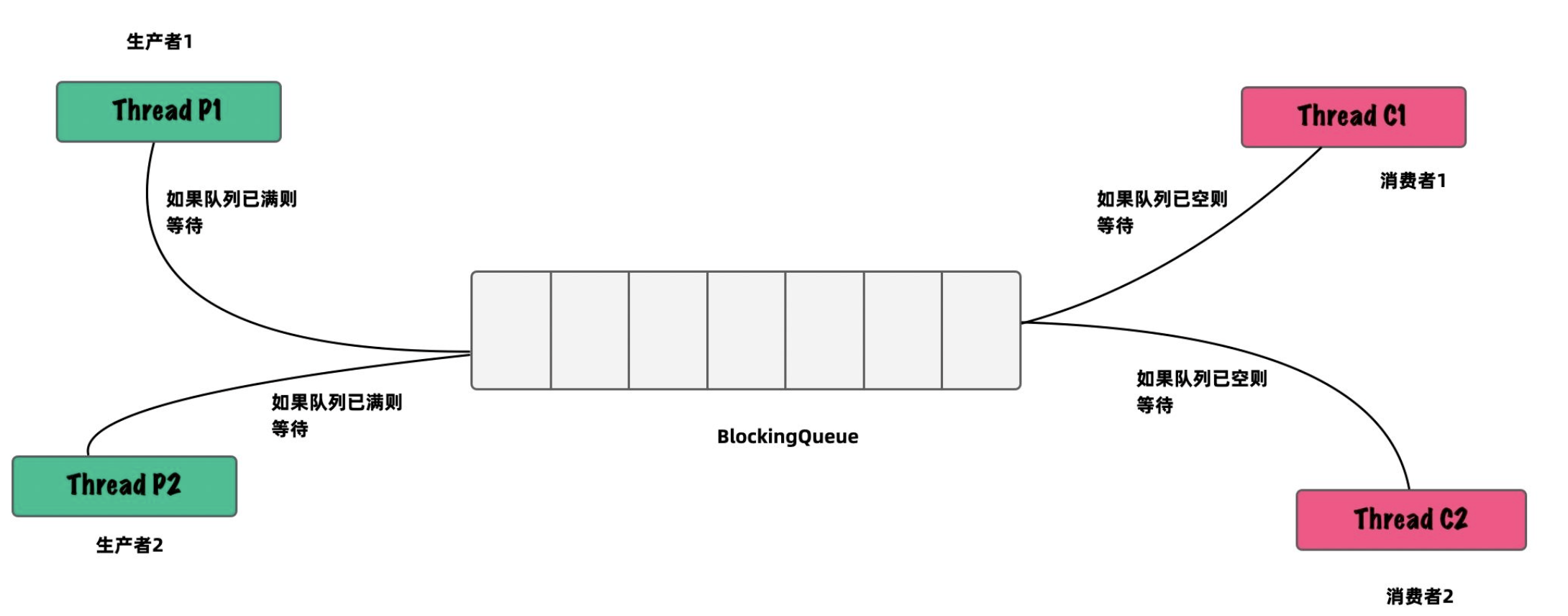

- getQueue():获取线程池中的阻塞队列,并通过阻塞队列中的方法获取队列长度、元素个数等;

- getPoolSize():获取线程池中的工作线程数(包括核心线程和非核心线程);

- getActiveCount():获取活跃线程数,也就是正在执行任务的线程;

- getLargestPoolSize():获取线程池曾经到过的最大工作线程数;

- getTaskCount():获取历史已完成以及正在执行的总的任务数量;
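The getters listed above can be sampled into a single snapshot that a reporter pushes to whatever monitoring backend is in use. The following is a minimal sketch; `PoolMetrics`, `snapshot`, and the map keys are hypothetical names chosen for illustration and are not part of the JDK or of the original implementation.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.ThreadPoolExecutor;

// Hypothetical helper: reads the ThreadPoolExecutor getters into one ordered map.
final class PoolMetrics {
    private PoolMetrics() {}

    static Map<String, Number> snapshot(ThreadPoolExecutor pool) {
        Map<String, Number> m = new LinkedHashMap<>();
        m.put("corePoolSize", pool.getCorePoolSize());
        m.put("maximumPoolSize", pool.getMaximumPoolSize());
        // getQueue() exposes the work queue; size() and remainingCapacity() describe its fill level.
        m.put("queueSize", pool.getQueue().size());
        m.put("queueRemainingCapacity", pool.getQueue().remainingCapacity());
        m.put("poolSize", pool.getPoolSize());
        m.put("activeCount", pool.getActiveCount());
        m.put("largestPoolSize", pool.getLargestPoolSize());
        m.put("taskCount", pool.getTaskCount());
        return m;
    }
}
```

Several of these values are approximations because the pool keeps changing while it is being read, so a snapshot is best treated as a trend sample rather than an exact count.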

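Beyond these, ThreadPoolExecutor also provides three hook methods:

- beforeExecute(): called by the worker thread before it runs a task;

- afterExecute(): called by the worker thread after it has run a task;

- terminated(): called once when the pool has fully shut down, just before its state becomes TERMINATED;

beforeExecute and afterExecute work much like AOP advice around each task, so they can be used to measure how long every task takes. terminated marks pool shutdown. Together with the getters, these hooks let us collect data across the whole lifecycle of the thread pool.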
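As an illustration of the three hooks, the sketch below subclasses ThreadPoolExecutor and records task counts and total execution time. The class name `TimingThreadPoolExecutor`, its accessor methods, and the `runAndAwait` helper are assumptions made for this example; a real setup would forward the numbers to its monitoring system instead of keeping them in fields.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical subclass: measures task duration through the executor hooks.
class TimingThreadPoolExecutor extends ThreadPoolExecutor {
    // Start time of the task currently running on each worker thread.
    private final ThreadLocal<Long> startNanos = new ThreadLocal<>();
    private final AtomicLong finishedTasks = new AtomicLong();
    private final AtomicLong totalNanos = new AtomicLong();
    private volatile boolean terminatedCalled;

    TimingThreadPoolExecutor(int core, int max, int queueCapacity) {
        super(core, max, 60L, TimeUnit.SECONDS, new LinkedBlockingQueue<>(queueCapacity));
    }

    @Override
    protected void beforeExecute(Thread t, Runnable r) {
        super.beforeExecute(t, r);
        startNanos.set(System.nanoTime());
    }

    @Override
    protected void afterExecute(Runnable r, Throwable t) {
        try {
            // Runs on the same worker thread as beforeExecute, so the ThreadLocal is populated.
            totalNanos.addAndGet(System.nanoTime() - startNanos.get());
            finishedTasks.incrementAndGet();
            startNanos.remove();
        } finally {
            super.afterExecute(r, t);
        }
    }

    @Override
    protected void terminated() {
        terminatedCalled = true; // a real implementation might flush final metrics here
        super.terminated();
    }

    long finishedTasks() { return finishedTasks.get(); }
    long totalNanos() { return totalNanos.get(); }
    boolean terminatedCalled() { return terminatedCalled; }

    /** Submits n empty tasks, shuts the pool down, and waits; returns false on timeout or interrupt. */
    static boolean runAndAwait(TimingThreadPoolExecutor pool, int n) {
        for (int i = 0; i < n; i++) {
            pool.execute(() -> { });
        }
        pool.shutdown();
        try {
            return pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

Dividing `totalNanos()` by `finishedTasks()` gives an average task duration; per-task values could be sent to a histogram instead if the backend supports one.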

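Data storage and dashboards

A time-series database is a good fit for storing this data. Many mature monitoring products can also cover the dashboard requirement; Meituan's CAT and Prometheus are two recommendations. We will not go into detail here. Choose according to the monitoring product your company uses; the storage format differs somewhat between solutions, and you can even build your own, which is not very difficult.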

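Further extensions and considerations

Real projects run into scenarios such as these:

- Different businesses share one thread pool. If one service blocks, it affects every service sharing the pool and can trigger the pool's rejection policy;

- Traffic spikes suddenly and the pool parameters need to be adjusted, but the application cannot be restarted;

These two scenarios led us to think more deeply about thread pools:

- How to configure thread pool parameters sensibly;

- How to adjust thread pool parameters dynamically;

- How to isolate thread pools between services;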

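How to configure thread pool parameters sensibly

This question comes up often in interviews, and frankly it is a trap from the start. CPU-bound and I/O-bound workloads call for different thread pool settings, and a couple of formulas will not settle the matter, although they are useful as a reference. In my view, sensible parameters are reached through repeated tuning, and even adding or removing business features can shift them. I do not recommend reciting by rote that a CPU-bound pool needs N+1 threads and a non-CPU-bound pool needs 2N. What we would rather have is a thread pool that can be reconfigured dynamically.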
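For reference only, the two rules of thumb mentioned above can be written down as starting values, assuming N is the number of available processors (the usual reading of these formulas). `PoolSizing` is a hypothetical helper name; the results are a first guess to tune from, not a recommendation.

```java
// Hypothetical helper: rule-of-thumb starting sizes, to be refined by measurement.
final class PoolSizing {
    private PoolSizing() {}

    /** CPU-bound starting point: N + 1 threads. */
    static int cpuBound(int processors) {
        return processors + 1;
    }

    /** Non-CPU-bound (I/O-heavy) starting point: 2N threads. */
    static int ioBound(int processors) {
        return 2 * processors;
    }
}
```

On a real machine N would typically come from `Runtime.getRuntime().availableProcessors()`.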

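How to adjust thread pool parameters dynamically

Dynamic adjustment goes back to the scenario we need to solve. With a traffic spike, the core task is to raise the pool's throughput, and there are two ways to do that. One is to make the business logic itself faster, that is, to consume tasks more quickly. That is hard to change in a running system, so it is better treated as a focus for the post-incident review. The other is to add consumers, which comes down to adjusting the number of core and non-core threads.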

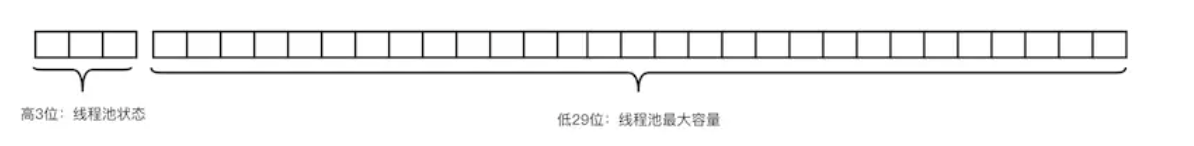

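Following this line of thought, we need to look at the ThreadPoolExecutor source code, starting with the fields it declares. Their design shows real craftsmanship: a single AtomicInteger named ctl stores the pool state in its high 3 bits and the worker count in its low 29 bits, so one variable both tracks the run state and bounds the maximum number of threads. Worker references are kept in a HashSet, and Worker extends AbstractQueuedSynchronizer to implement a non-reentrant exclusive lock, which keeps thread handling safe.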

// Packs the pool state (high 3 bits) and the worker count (low 29 bits) into one value
private final AtomicInteger ctl = new AtomicInteger(ctlOf(RUNNING, 0));
// Number of bits used for the worker count; the run state occupies the high 3 bits
private static final int COUNT_BITS = Integer.SIZE - 3;
// Largest worker count that can be represented
private static final int CAPACITY = (1 << COUNT_BITS) - 1;

// Pool states
// RUNNING (-1): accepts new tasks and processes queued tasks
private static final int RUNNING = -1 << COUNT_BITS;
// SHUTDOWN (0): accepts no new tasks but keeps processing queued tasks
private static final int SHUTDOWN = 0 << COUNT_BITS;
// STOP (1): accepts no new tasks, does not process queued tasks, and interrupts running tasks
private static final int STOP = 1 << COUNT_BITS;
// TIDYING (2): all tasks have ended and the worker count is 0; the single thread that
// makes this transition goes on to run the terminated() hook
private static final int TIDYING = 2 << COUNT_BITS;
// TERMINATED (3): the terminated() hook has completed
private static final int TERMINATED = 3 << COUNT_BITS;

// Task queue: once the number of threads reaches corePoolSize, newly submitted tasks are offered to workQueue
private final BlockingQueue<Runnable> workQueue;
// Pool-wide lock, held when adding or removing workers and when changing the pool's run state
private final ReentrantLock mainLock = new ReentrantLock();
// Where the pool actually stores its workers
private final HashSet<Worker> workers = new HashSet<Worker>();
private final Condition termination = mainLock.newCondition();
// Largest number of threads reached during the pool's lifetime
private int largestPoolSize;
// Total number of tasks the pool has completed
private long completedTaskCount;
// Factory used to create threads
private volatile ThreadFactory threadFactory;
// Rejection policy
private volatile RejectedExecutionHandler handler;
// Keep-alive time for idle threads
private volatile long keepAliveTime;
// Whether threads within the core pool size may time out: true means they may, false means they may not
private volatile boolean allowCoreThreadTimeOut;
// Core pool size
private volatile int corePoolSize;
// Maximum pool size
private volatile int maximumPoolSize;
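To see how one int carries both pieces of information, the sketch below re-creates the bit arithmetic outside the JDK. `CtlDemo` is a hypothetical name; the three helper methods follow the JDK 8 source of ThreadPoolExecutor, where they are private.

```java
// Hypothetical stand-alone copy of the ctl bit packing, for experimentation only.
final class CtlDemo {
    static final int COUNT_BITS = Integer.SIZE - 3;      // 29 bits for the worker count
    static final int CAPACITY = (1 << COUNT_BITS) - 1;   // low 29 bits set
    static final int RUNNING = -1 << COUNT_BITS;         // high bits 111
    static final int SHUTDOWN = 0 << COUNT_BITS;         // high bits 000
    static final int STOP = 1 << COUNT_BITS;             // high bits 001

    private CtlDemo() {}

    /** Keeps only the high 3 bits: the run state. */
    static int runStateOf(int c) {
        return c & ~CAPACITY;
    }

    /** Keeps only the low 29 bits: the worker count. */
    static int workerCountOf(int c) {
        return c & CAPACITY;
    }

    /** Combines a run state and a worker count into one ctl value. */
    static int ctlOf(int rs, int wc) {
        return rs | wc;
    }
}
```

Because RUNNING is negative and the later states are non-negative and increasing, the state constants also compare in lifecycle order as plain integers.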

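Our focus is on corePoolSize, maximumPoolSize, and keepAliveTime, all declared volatile. threadFactory and handler are worth a glance too, but they are not the key to resizing a pool dynamically. A field is declared volatile because it is read and written concurrently. We already saw the getters during metric collection, so it is reasonable to guess that matching setters exist. Searching for setCorePoolSize confirms it: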

public void setCorePoolSize(int corePoolSize) {
    if (corePoolSize < 0)
        throw new IllegalArgumentException();
    int delta = corePoolSize - this.corePoolSize;
    this.corePoolSize = corePoolSize;
    // If the pool currently holds more workers than the new corePoolSize,
    // interrupt idle workers so the surplus threads can exit
    if (workerCountOf(ctl.get()) > corePoolSize)
        interruptIdleWorkers();
    else if (delta > 0) {
        // If the new value is larger than the old one, start additional
        // workers, at most one per task currently waiting in the queue
        int k = Math.min(delta, workQueue.size());
        while (k-- > 0 && addWorker(null, true)) {
            if (workQueue.isEmpty())
                break;
        }
    }
}

Next, look at the source of interruptIdleWorkers. It takes the ReentrantLock mainLock: all Worker instances live in one shared HashSet, and the lock keeps access to that set thread-safe.

private void interruptIdleWorkers(boolean onlyOne) {
    // Reentrant lock guarding the workers set
    final ReentrantLock mainLock = this.mainLock;
    mainLock.lock();
    try {
        for (Worker w : workers) {
            Thread t = w.thread;
            // tryLock succeeds only if the worker is idle (not running a task)
            if (!t.isInterrupted() && w.tryLock()) {
                try {
                    // Interrupt this idle worker's thread
                    t.interrupt();
                } catch (SecurityException ignore) {
                } finally {
                    w.unlock();
                }
            }
            if (onlyOne)
                break;
        }
    } finally {
        mainLock.unlock();
    }
}

Next, let us check whether setters exist for the other related parameters:

public void setMaximumPoolSize(int maximumPoolSize) {
    if (maximumPoolSize <= 0 || maximumPoolSize < corePoolSize)
        throw new IllegalArgumentException();
    this.maximumPoolSize = maximumPoolSize;
    if (workerCountOf(ctl.get()) > maximumPoolSize)
        interruptIdleWorkers();
}

public void setKeepAliveTime(long time, TimeUnit unit) {
    if (time < 0)
        throw new IllegalArgumentException();
    if (time == 0 && allowsCoreThreadTimeOut())
        throw new IllegalArgumentException("Core threads must have nonzero keep alive times");
    long keepAliveTime = unit.toNanos(time);
    long delta = keepAliveTime - this.keepAliveTime;
    this.keepAliveTime = keepAliveTime;
    if (delta < 0)
        interruptIdleWorkers();
}

public void setRejectedExecutionHandler(RejectedExecutionHandler handler) {
    if (handler == null)
        throw new NullPointerException();
    this.handler = handler;
}
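Because setMaximumPoolSize rejects a value below the current core size (and, on newer JDKs, setCorePoolSize also rejects a value above the current maximum), the order of the two calls matters when both change at once. The sketch below shows one way to apply them safely; `PoolResizer` and `resize` are hypothetical names introduced for this example.

```java
import java.util.concurrent.ThreadPoolExecutor;

// Hypothetical helper: applies a new core/max pair in an order that never violates core <= max.
final class PoolResizer {
    private PoolResizer() {}

    static void resize(ThreadPoolExecutor pool, int newCore, int newMax) {
        if (newCore < 0 || newMax <= 0 || newMax < newCore) {
            throw new IllegalArgumentException("require 0 <= core <= max and max > 0");
        }
        if (newMax >= pool.getMaximumPoolSize()) {
            // Growing (or keeping) the ceiling: raise max first, then core.
            pool.setMaximumPoolSize(newMax);
            pool.setCorePoolSize(newCore);
        } else {
            // Lowering the ceiling: lower core first, then max.
            pool.setCorePoolSize(newCore);
            pool.setMaximumPoolSize(newMax);
        }
    }
}
```

A configuration-refresh listener could call `PoolResizer.resize(pool, core, max)` for each pool whose settings changed.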

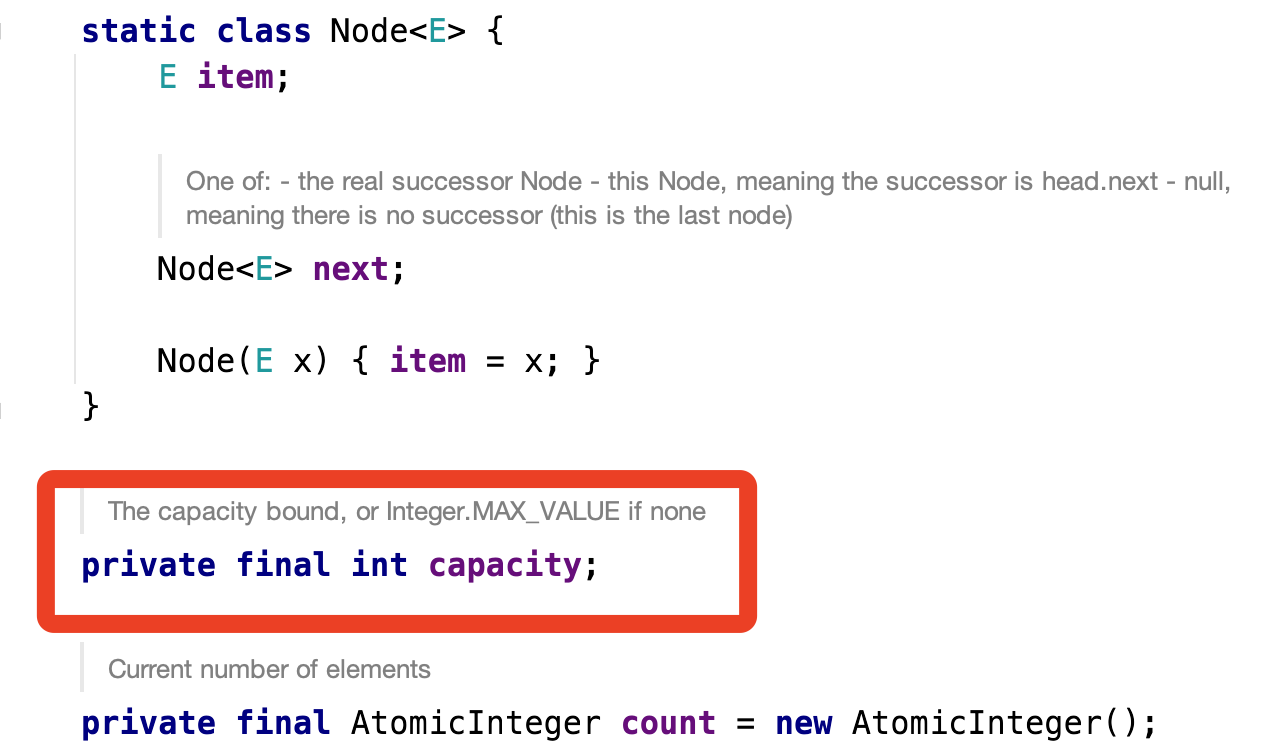

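One problem remains: the capacity of the BlockingQueue cannot be changed. An article from Meituan describes a resizable queue, ResizableCapacityLinkedBlockingQueue, so I looked at the LinkedBlockingQueue source and found that capacity is declared final. The idea, then, is to copy the queue, declare capacity volatile instead of final, and expose a setter, which makes the size of the blocking queue adjustable at runtime.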

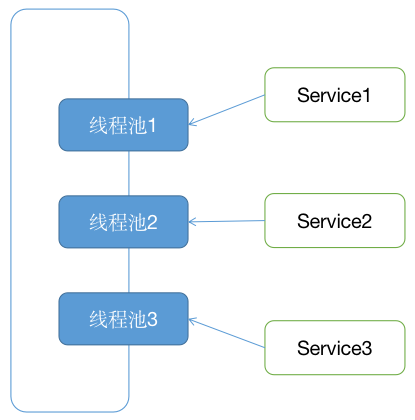

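How to isolate thread pools between services

To isolate thread pools between services, we can borrow Hystrix's bulkhead pattern: create a separate thread pool for each type of service. Services then no longer affect one another, and a single misbehaving service cannot block everything else.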
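The effect of the bulkhead pattern can be shown with plain JDK classes, without Hystrix itself. In this sketch every thread of a "slow" pool is deliberately blocked while a separate "fast" pool keeps answering. `BulkheadDemo`, its method names, and the pool sizes are all assumptions made for illustration.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Hypothetical demo: one pool per service type, so a stuck service cannot starve the other.
final class BulkheadDemo {
    private BulkheadDemo() {}

    static ThreadPoolExecutor newPool(int threads, int queueCapacity) {
        return new ThreadPoolExecutor(threads, threads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(queueCapacity));
    }

    /** Blocks all threads of the slow pool, then calls the fast pool and returns its answer. */
    static String callFastWhileSlowIsStuck() {
        ThreadPoolExecutor slowPool = newPool(2, 10);
        ThreadPoolExecutor fastPool = newPool(2, 10);
        CountDownLatch release = new CountDownLatch(1);
        try {
            for (int i = 0; i < 2; i++) {
                // Each task waits on the latch, simulating a hung downstream call.
                slowPool.submit(() -> {
                    release.await();
                    return null;
                });
            }
            // The fast service has its own threads, so it is unaffected.
            return fastPool.submit(() -> "fast-ok").get(2, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return "interrupted";
        } catch (ExecutionException | TimeoutException e) {
            return "failed: " + e;
        } finally {
            release.countDown();
            slowPool.shutdown();
            fastPool.shutdown();
        }
    }
}
```

Had both services shared one two-thread pool, the second call would have waited in the queue behind the blocked tasks until the timeout expired.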

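Implementation

With the background covered, let us turn to the implementation. The solution is built on Spring Boot and uses Spring Cloud to refresh configuration dynamically. This approach suits a monolithic application; if you use Apollo or Nacos, you can refresh dynamically by listening for configuration changes instead.

- The Maven dependencies are as follows: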

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-context</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-test</artifactId>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
        <version>1.18.12</version>
    </dependency>
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-api</artifactId>
        <version>1.7.5</version>
    </dependency>
    <dependency>
        <groupId>ch.qos.logback</groupId>
        <artifactId>logback-core</artifactId>
        <version>1.2.3</version>
    </dependency>
    <dependency>
        <groupId>ch.qos.logback</groupId>
        <artifactId>logback-classic</artifactId>
        <version>1.2.3</version>
    </dependency>
</dependencies>

<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-dependencies</artifactId>
            <version>Hoxton.SR7</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>

- The configuration is as follows:

monitor.threadpool.executors[0].thread-pool-name=first-monitor-thread-pool

monitor.threadpool.executors[0].core-pool-size=4

monitor.threadpool.executors[0].max-pool-size=8

monitor.threadpool.executors[0].queue-capacity=100

monitor.threadpool.executors[1].thread-pool-name=second-monitor-thread-pool

monitor.threadpool.executors[1].core-pool-size=2

monitor.threadpool.executors[1].max-pool-size=4

monitor.threadpool.executors[1].queue-capacity=40

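import java.util.concurrent.TimeUnit;

import lombok.Data;

/**
 * Thread pool configuration.
 *
 * @author wangtongzhou
 * @since 2022-03-11 21:41
 */
@Data
public class ThreadPoolProperties {

    /** Thread pool name. */
    private String threadPoolName;

    /** Core pool size; defaults to the number of available processors. */
    private Integer corePoolSize = Runtime.getRuntime().availableProcessors();

    /** Maximum pool size. */
    private Integer maxPoolSize;

    /** Maximum number of queued tasks. */
    private Integer queueCapacity;

    /** Rejection policy. */
    private String rejectedExecutionType = "AbortPolicy";

    /** Keep-alive time for idle threads. */
    private Long keepAliveTime = 1L;

    /** Unit of the idle-thread keep-alive time. */
    private TimeUnit unit = TimeUnit.MILLISECONDS;
}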
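One way to turn such property values into a running pool is sketched below. To stay self-contained it takes plain parameters rather than a ThreadPoolProperties instance, and it uses the JDK's LinkedBlockingQueue rather than the resizable queue. `ExecutorFactory`, `handlerFor`, and the thread-naming scheme are assumptions made for this example, as is the mapping from the `rejectedExecutionType` string to the four built-in JDK policies.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical factory: builds a ThreadPoolExecutor from values like those in the properties class.
final class ExecutorFactory {
    private ExecutorFactory() {}

    /** Maps a policy name such as "AbortPolicy" to the matching built-in JDK handler. */
    static RejectedExecutionHandler handlerFor(String type) {
        switch (type) {
            case "AbortPolicy":
                return new ThreadPoolExecutor.AbortPolicy();
            case "CallerRunsPolicy":
                return new ThreadPoolExecutor.CallerRunsPolicy();
            case "DiscardPolicy":
                return new ThreadPoolExecutor.DiscardPolicy();
            case "DiscardOldestPolicy":
                return new ThreadPoolExecutor.DiscardOldestPolicy();
            default:
                throw new IllegalArgumentException("Unknown rejection policy: " + type);
        }
    }

    static ThreadPoolExecutor build(String name, int core, int max, int queueCapacity,
                                    long keepAlive, TimeUnit unit, String rejectedType) {
        AtomicInteger seq = new AtomicInteger();
        // Name threads after the pool so thread dumps and metrics can be told apart.
        ThreadFactory factory = r -> new Thread(r, name + "-" + seq.incrementAndGet());
        return new ThreadPoolExecutor(core, max, keepAlive, unit,
                new LinkedBlockingQueue<>(queueCapacity), factory, handlerFor(rejectedType));
    }
}
```

With the first pool from the configuration shown earlier, the call would be `ExecutorFactory.build("first-monitor-thread-pool", 4, 8, 100, 1L, TimeUnit.MILLISECONDS, "AbortPolicy")`.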

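import java.util.List;

import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.stereotype.Component;

/**
 * Thread pool configuration that can be refreshed dynamically.
 *
 * @since 2022-03-13 14:09
 */
@ConfigurationProperties(prefix = "monitor.threadpool")
@Component
@Data // generates the getter and setter that property binding relies on
public class DynamicThreadPoolProperties {

    private List<ThreadPoolProperties> executors;
}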

- A custom blocking queue whose capacity can be changed is implemented as follows:

/**

* A blocking queue whose capacity can be reset at runtime

*

* @author wangtongzhou

* @since 2022-03-13 11:54

*/

public class ResizableCapacityLinkedBlockingQueue<E> extends AbstractQueue<E>

implements BlockingDeque<E>, java.io.Serializable {

/*

* Implemented as a simple doubly-linked list protected by a

* single lock and using conditions to manage blocking.

*

* To implement weakly consistent iterators, it appears we need to

* keep all Nodes GC-reachable from a predecessor dequeued Node.

* That would cause two problems:

* - allow a rogue Iterator to cause unbounded memory retention

* - cause cross-generational linking of old Nodes to new Nodes if

* a Node was tenured while live, which generational GCs have a

* hard time dealing with, causing repeated major collections.

* However, only non-deleted Nodes need to be reachable from

* dequeued Nodes, and reachability does not necessarily have to

* be of the kind understood by the GC. We use the trick of

* linking a Node that has just been dequeued to itself. Such a

* self-link implicitly means to jump to "first" (for next links)

* or "last" (for prev links).

*/

/*

* We have "diamond" multiple interface/abstract class inheritance

* here, and that introduces ambiguities. Often we want the

* BlockingDeque javadoc combined with the AbstractQueue

* implementation, so a lot of method specs are duplicated here.

*/

private static final long serialVersionUID = -387911632671998426L;

/**

* Doubly-linked list node class

*/

static final class Node<E> {

/**

* The item, or null if this node has been removed.

*/

E item;

/**

* One of:

* - the real predecessor Node

* - this Node, meaning the predecessor is tail

* - null, meaning there is no predecessor

*/

Node<E> prev;

/**

* One of:

* - the real successor Node

* - this Node, meaning the successor is head

* - null, meaning there is no successor

*/

Node<E> next;

Node(E x) {

item = x;

}

}

/**

* Pointer to first node.

* Invariant: (first == null && last == null) ||

* (first.prev == null && first.item != null)

*/

transient Node<E> first;

/**

* Pointer to last node.

* Invariant: (first == null && last == null) ||

* (last.next == null && last.item != null)

*/

transient Node<E> last;

/**

* Number of items in the deque

*/

private transient int count;

/**

* Maximum number of items in the deque

*/

private volatile int capacity;

public int getCapacity() {

return capacity;

}

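public void setCapacity(int capacity) {

if (capacity <= 0) {

throw new IllegalArgumentException();

}

final ReentrantLock lock = this.lock;

lock.lock();

try {

this.capacity = capacity;

// Wake producers blocked in put/offer so they re-check against the new capacity

notFull.signalAll();

} finally {

lock.unlock();

}

}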

/**

* Main lock guarding all access

*/

final ReentrantLock lock = new ReentrantLock();

/**

* Condition for waiting takes

*/

private final Condition notEmpty = lock.newCondition();

/**

* Condition for waiting puts

*/

private final Condition notFull = lock.newCondition();

/**

* Creates a {@code ResizableCapacityLinkedBlockingQueue} with a capacity of

* {@link Integer#MAX_VALUE}.

*/

public ResizableCapacityLinkedBlockingQueue() {

this(Integer.MAX_VALUE);

}

/**

* Creates a {@code ResizableCapacityLinkedBlockingQueue} with the given initial capacity.

*

* @param capacity the capacity of this deque

* @throws IllegalArgumentException if {@code capacity} is less than 1

*/

public ResizableCapacityLinkedBlockingQueue(int capacity) {

if (capacity <= 0) {

throw new IllegalArgumentException();

}

this.capacity = capacity;

}

/**

* Creates a {@code ResizableCapacityLinkedBlockingQueue} with a capacity of

* {@link Integer#MAX_VALUE}, initially containing the elements of

* the given collection, added in traversal order of the

* collection's iterator.

*

* @param c the collection of elements to initially contain

* @throws NullPointerException if the specified collection or any

* of its elements are null

*/

public ResizableCapacityLinkedBlockingQueue(Collection<? extends E> c) {

this(Integer.MAX_VALUE);

final ReentrantLock lock = this.lock;

lock.lock(); // Never contended, but necessary for visibility

try {

for (E e : c) {

if (e == null) {

throw new NullPointerException();

}

if (!linkLast(new Node<E>(e))) {

throw new IllegalStateException("Deque full");

}

}

} finally {

lock.unlock();

}

}

// Basic linking and unlinking operations, called only while holding lock

/**

* Links node as first element, or returns false if full.

*/

private boolean linkFirst(Node<E> node) {

// assert lock.isHeldByCurrentThread();

if (count >= capacity) {

return false;

}

Node<E> f = first;

node.next = f;

first = node;

if (last == null) {

last = node;

} else {

f.prev = node;

}

++count;

notEmpty.signal();

return true;

}

/**

* Links node as last element, or returns false if full.

*/

private boolean linkLast(Node<E> node) {

// assert lock.isHeldByCurrentThread();

if (count >= capacity) {

return false;

}

Node<E> l = last;

node.prev = l;

last = node;

if (first == null) {

first = node;

} else {

l.next = node;

}

++count;

notEmpty.signal();

return true;

}

/**

* Removes and returns first element, or null if empty.

*/

private E unlinkFirst() {

// assert lock.isHeldByCurrentThread();

Node<E> f = first;

if (f == null) {

return null;

}

Node<E> n = f.next;

E item = f.item;

f.item = null;

f.next = f; // help GC

first = n;

if (n == null) {

last = null;

} else {

n.prev = null;

}

--count;

notFull.signal();

return item;

}

/**

* Removes and returns last element, or null if empty.

*/

private E unlinkLast() {

// assert lock.isHeldByCurrentThread();

Node<E> l = last;

if (l == null) {

return null;

}

Node<E> p = l.prev;

E item = l.item;

l.item = null;

l.prev = l; // help GC

last = p;

if (p == null) {

first = null;

} else {

p.next = null;

}

--count;

notFull.signal();

return item;

}

/**

* Unlinks x.

*/

void unlink(Node<E> x) {

// assert lock.isHeldByCurrentThread();

Node<E> p = x.prev;

Node<E> n = x.next;

if (p == null) {

unlinkFirst();

} else if (n == null) {

unlinkLast();

} else {

p.next = n;

n.prev = p;

x.item = null;

// Don't mess with x's links. They may still be in use by

// an iterator.

--count;

notFull.signal();

}

}

// BlockingDeque methods

/**

* @throws IllegalStateException if this deque is full

* @throws NullPointerException {@inheritDoc}

*/

@Override

public void addFirst(E e) {

if (!offerFirst(e)) {

throw new IllegalStateException("Deque full");

}

}

/**

* @throws IllegalStateException if this deque is full

* @throws NullPointerException {@inheritDoc}

*/

@Override

public void addLast(E e) {

if (!offerLast(e)) {

throw new IllegalStateException("Deque full");

}

}

/**

* @throws NullPointerException {@inheritDoc}

*/

@Override

public boolean offerFirst(E e) {

if (e == null) {

throw new NullPointerException();

}

Node<E> node = new Node<E>(e);

final ReentrantLock lock = this.lock;

lock.lock();

try {

return linkFirst(node);

} finally {

lock.unlock();

}

}

/**

* @throws NullPointerException {@inheritDoc}

*/

@Override

public boolean offerLast(E e) {

if (e == null) throw new NullPointerException();

Node<E> node = new Node<E>(e);

final ReentrantLock lock = this.lock;

lock.lock();

try {

return linkLast(node);

} finally {

lock.unlock();

}

}

/**

* @throws NullPointerException {@inheritDoc}

* @throws InterruptedException {@inheritDoc}

*/

@Override

public void putFirst(E e) throws InterruptedException {

if (e == null) {

throw new NullPointerException();

}

Node<E> node = new Node<E>(e);

final ReentrantLock lock = this.lock;

lock.lock();

try {

while (!linkFirst(node)) {

notFull.await();

}

} finally {

lock.unlock();

}

}

/**

* @throws NullPointerException {@inheritDoc}

* @throws InterruptedException {@inheritDoc}

*/

@Override

public void putLast(E e) throws InterruptedException {

if (e == null) {

throw new NullPointerException();

}

Node<E> node = new Node<E>(e);

final ReentrantLock lock = this.lock;

lock.lock();

try {

while (!linkLast(node)) {

notFull.await();

}

} finally {

lock.unlock();

}

}

/**

* @throws NullPointerException {@inheritDoc}

* @throws InterruptedException {@inheritDoc}

*/

@Override

public boolean offerFirst(E e, long timeout, TimeUnit unit)

throws InterruptedException {

if (e == null) {

throw new NullPointerException();

}

Node<E> node = new Node<E>(e);

long nanos = unit.toNanos(timeout);

final ReentrantLock lock = this.lock;

lock.lockInterruptibly();

try {

while (!linkFirst(node)) {

if (nanos <= 0) {

return false;

}

nanos = notFull.awaitNanos(nanos);

}

return true;

} finally {

lock.unlock();

}

}

/**

* @throws NullPointerException {@inheritDoc}

* @throws InterruptedException {@inheritDoc}

*/

@Override

public boolean offerLast(E e, long timeout, TimeUnit unit)

throws InterruptedException {

if (e == null) throw new NullPointerException();

Node<E> node = new Node<E>(e);

long nanos = unit.toNanos(timeout);

final ReentrantLock lock = this.lock;

lock.lockInterruptibly();

try {

while (!linkLast(node)) {

if (nanos <= 0) {

return false;

}

nanos = notFull.awaitNanos(nanos);

}

return true;

} finally {

lock.unlock();

}

}

/**

* @throws NoSuchElementException {@inheritDoc}

*/

@Override

public E removeFirst() {

E x = pollFirst();

if (x == null) {

throw new NoSuchElementException();

}

return x;

}

/**

* @throws NoSuchElementException {@inheritDoc}

*/

@Override

public E removeLast() {

E x = pollLast();

if (x == null) {

throw new NoSuchElementException();

}

return x;

}

@Override

public E pollFirst() {

final ReentrantLock lock = this.lock;

lock.lock();

try {

return unlinkFirst();

} finally {

lock.unlock();

}

}

@Override

public E pollLast() {

final ReentrantLock lock = this.lock;

lock.lock();

try {

return unlinkLast();

} finally {

lock.unlock();

}

}

@Override

public E takeFirst() throws InterruptedException {

final ReentrantLock lock = this.lock;

lock.lock();

try {

E x;

while ((x = unlinkFirst()) == null) {

notEmpty.await();

}

return x;

} finally {

lock.unlock();

}

}

@Override

public E takeLast() throws InterruptedException {

final ReentrantLock lock = this.lock;

lock.lock();

try {

E x;

while ((x = unlinkLast()) == null) {

notEmpty.await();

}

return x;

} finally {

lock.unlock();

}

}

@Override

public E pollFirst(long timeout, TimeUnit unit)

throws InterruptedException {

long nanos = unit.toNanos(timeout);

final ReentrantLock lock = this.lock;

lock.lockInterruptibly();

try {

E x;

while ((x = unlinkFirst()) == null) {

if (nanos <= 0) {

return null;

}

nanos = notEmpty.awaitNanos(nanos);

}

return x;

} finally {

lock.unlock();

}

}

@Override

public E pollLast(long timeout, TimeUnit unit)

throws InterruptedException {

long nanos = unit.toNanos(timeout);

final ReentrantLock lock = this.lock;

lock.lockInterruptibly();

try {

E x;

while ((x = unlinkLast()) == null) {

if (nanos <= 0) {

return null;

}

nanos = notEmpty.awaitNanos(nanos);

}

return x;

} finally {

lock.unlock();

}

}

/**

* @throws NoSuchElementException {@inheritDoc}

*/

@Override

public E getFirst() {

E x = peekFirst();

if (x == null) {

throw new NoSuchElementException();

}

return x;

}

/**

* @throws NoSuchElementException {@inheritDoc}

*/

@Override

public E getLast() {

E x = peekLast();

if (x == null) {

throw new NoSuchElementException();

}

return x;

}

@Override

public E peekFirst() {

final ReentrantLock lock = this.lock;

lock.lock();

try {

return (first == null) ? null : first.item;

} finally {

lock.unlock();

}

}

@Override

public E peekLast() {

final ReentrantLock lock = this.lock;

lock.lock();

try {

return (last == null) ? null : last.item;

} finally {

lock.unlock();

}

}

@Override

public boolean removeFirstOccurrence(Object o) {

if (o == null) {

return false;

}

final ReentrantLock lock = this.lock;

lock.lock();

try {

for (Node<E> p = first; p != null; p = p.next) {

if (o.equals(p.item)) {

unlink(p);

return true;

}

}

return false;

} finally {

lock.unlock();

}

}

@Override

public boolean removeLastOccurrence(Object o) {

if (o == null) {

return false;

}

final ReentrantLock lock = this.lock;

lock.lock();

try {

for (Node<E> p = last; p != null; p = p.prev) {

if (o.equals(p.item)) {

unlink(p);

return true;

}

}

return false;

} finally {

lock.unlock();

}

}

// BlockingQueue methods

/**

* Inserts the specified element at the end of this deque unless it would

* violate capacity restrictions. When using a capacity-restricted deque,

* it is generally preferable to use method {@link #offer(Object) offer}.

*

* <p>This method is equivalent to {@link #addLast}.

*

* @throws IllegalStateException if this deque is full

* @throws NullPointerException if the specified element is null

*/

@Override

public boolean add(E e) {

addLast(e);

return true;

}

/**

* @throws NullPointerException if the specified element is null

*/

@Override

public boolean offer(E e) {

return offerLast(e);

}

/**

* @throws NullPointerException {@inheritDoc}

* @throws InterruptedException {@inheritDoc}

*/

@Override

public void put(E e) throws InterruptedException {

putLast(e);

}

/**

* @throws NullPointerException {@inheritDoc}

* @throws InterruptedException {@inheritDoc}

*/

@Override

public boolean offer(E e, long timeout, TimeUnit unit)

throws InterruptedException {

return offerLast(e, timeout, unit);

}

/**

* Retrieves and removes the head of the queue represented by this deque.

* This method differs from {@link #poll poll} only in that it throws an

* exception if this deque is empty.

*

* <p>This method is equivalent to {@link #removeFirst() removeFirst}.

*

* @return the head of the queue represented by this deque

* @throws NoSuchElementException if this deque is empty

*/

@Override

public E remove() {

return removeFirst();

}

@Override

public E poll() {

return pollFirst();

}

@Override

public E take() throws InterruptedException {

return takeFirst();

}

@Override

public E poll(long timeout, TimeUnit unit) throws InterruptedException {

return pollFirst(timeout, unit);

}

/**

* Retrieves, but does not remove, the head of the queue represented by

* this deque. This method differs from {@link #peek peek} only in that

* it throws an exception if this deque is empty.

*

* <p>This method is equivalent to {@link #getFirst() getFirst}.

*

* @return the head of the queue represented by this deque

* @throws NoSuchElementException if this deque is empty

*/

@Override

public E element() {

return getFirst();

}

@Override

public E peek() {

return peekFirst();

}

/**

* Returns the number of additional elements that this deque can ideally

* (in the absence of memory or resource constraints) accept without

* blocking. This is always equal to the current capacity of this deque

* less the current {@code size} of this deque.

*

* <p>Note that you <em>cannot</em> always tell if an attempt to insert

* an element will succeed by inspecting {@code remainingCapacity}

* because it may be the case that another thread is about to

* insert or remove an element.

*/

@Override

public int remainingCapacity() {

final ReentrantLock lock = this.lock;

lock.lock();

try {

return capacity - count;

} finally {

lock.unlock();

}

}

/**

* @throws UnsupportedOperationException {@inheritDoc}

* @throws ClassCastException {@inheritDoc}

* @throws NullPointerException {@inheritDoc}

* @throws IllegalArgumentException {@inheritDoc}

*/

@Override

public int drainTo(Collection<? super E> c) {

return drainTo(c, Integer.MAX_VALUE);

}

/**

* @throws UnsupportedOperationException {@inheritDoc}

* @throws ClassCastException {@inheritDoc}

* @throws NullPointerException {@inheritDoc}

* @throws IllegalArgumentException {@inheritDoc}

*/

@Override

public int drainTo(Collection<? super E> c, int maxElements) {

if (c == null) {

throw new NullPointerException();

}

if (c == this) {

throw new IllegalArgumentException();

}

if (maxElements <= 0) {

return 0;

}

final ReentrantLock lock = this.lock;

lock.lock();

try {

int n = Math.min(maxElements, count);

for (int i = 0; i < n; i++) {

c.add(first.item); // In this order, in case add() throws.

unlinkFirst();

}

return n;

} finally {

lock.unlock();

}

}

// Stack methods

/**

* @throws IllegalStateException if this deque is full

* @throws NullPointerException {@inheritDoc}

*/

@Override

public void push(E e) {

addFirst(e);

}

/**

* @throws NoSuchElementException {@inheritDoc}

*/

@Override

public E pop() {

return removeFirst();

}

// Collection methods

/**

* Removes the first occurrence of the specified element from this deque.

* If the deque does not contain the element, it is unchanged.

* More formally, removes the first element {@code e} such that

* {@code o.equals(e)} (if such an element exists).

* Returns {@code true} if this deque contained the specified element

* (or equivalently, if this deque changed as a result of the call).

*

* <p>This method is equivalent to

* {@link #removeFirstOccurrence(Object) removeFirstOccurrence}.

*

* @param o element to be removed from this deque, if present

* @return {@code true} if this deque changed as a result of the call

*/

@Override

public boolean remove(Object o) {

return removeFirstOccurrence(o);

}

/**

* Returns the number of elements in this deque.

*

* @return the number of elements in this deque

*/

@Override

public int size() {

final ReentrantLock lock = this.lock;

lock.lock();

try {

return count;

} finally {

lock.unlock();

}

}

/**

* Returns {@code true} if this deque contains the specified element.

* More formally, returns {@code true} if and only if this deque contains

* at least one element {@code e} such that {@code o.equals(e)}.

*

* @param o object to be checked for containment in this deque

* @return {@code true} if this deque contains the specified element

*/

@Override

public boolean contains(Object o) {

if (o == null) {

return false;

}

final ReentrantLock lock = this.lock;

lock.lock();

try {

for (Node<E> p = first; p != null; p = p.next) {

if (o.equals(p.item)) {

return true;

}

}

return false;

} finally {

lock.unlock();

}

}

/*

* TODO: Add support for more efficient bulk operations.

*

* We don't want to acquire the lock for every iteration, but we

* also want other threads a chance to interact with the

* collection, especially when count is close to capacity.

*/

// /**

// * Adds all of the elements in the specified collection to this

// * queue. Attempts to addAll of a queue to itself result in

// * {@code IllegalArgumentException}. Further, the behavior of

// * this operation is undefined if the specified collection is

// * modified while the operation is in progress.

// *

// * @param c collection containing elements to be added to this queue

// * @return {@code true} if this queue changed as a result of the call

// * @throws ClassCastException {@inheritDoc}

// * @throws NullPointerException {@inheritDoc}

// * @throws IllegalArgumentException {@inheritDoc}

// * @throws IllegalStateException if this deque is full

// * @see #add(Object)

// */

// public boolean addAll(Collection<? extends E> c) {

// if (c == null)

// throw new NullPointerException();

// if (c == this)

// throw new IllegalArgumentException();

// final ReentrantLock lock = this.lock;

// lock.lock();

// try {

// boolean modified = false;

// for (E e : c)

// if (linkLast(e))

// modified = true;

// return modified;

// } finally {

// lock.unlock();

// }

// }

/**

* Returns an array containing all of the elements in this deque, in

* proper sequence (from first to last element).

*

* <p>The returned array will be "safe" in that no references to it are

* maintained by this deque. (In other words, this method must allocate

* a new array). The caller is thus free to modify the returned array.

*

* <p>This method acts as bridge between array-based and collection-based

* APIs.

*

* @return an array containing all of the elements in this deque

*/

@Override

@SuppressWarnings("unchecked")

public Object[] toArray() {

final ReentrantLock lock = this.lock;

lock.lock();

try {

Object[] a = new Object[count];

int k = 0;

for (Node<E> p = first; p != null; p = p.next) {

a[k++] = p.item;

}

return a;

} finally {

lock.unlock();

}

}

/**

* Returns an array containing all of the elements in this deque, in

* proper sequence; the runtime type of the returned array is that of

* the specified array. If the deque fits in the specified array, it

* is returned therein. Otherwise, a new array is allocated with the

* runtime type of the specified array and the size of this deque.

*

* <p>If this deque fits in the specified array with room to spare

* (i.e., the array has more elements than this deque), the element in

* the array immediately following the end of the deque is set to

* {@code null}.

*

* <p>Like the {@link #toArray()} method, this method acts as bridge between

* array-based and collection-based APIs. Further, this method allows

* precise control over the runtime type of the output array, and may,

* under certain circumstances, be used to save allocation costs.

*

* <p>Suppose {@code x} is a deque known to contain only strings.

* The following code can be used to dump the deque into a newly

* allocated array of {@code String}:

*

* <pre> {@code String[] y = x.toArray(new String[0]);}</pre>

* <p>

* Note that {@code toArray(new Object[0])} is identical in function to

* {@code toArray()}.

*

* @param a the array into which the elements of the deque are to

* be stored, if it is big enough; otherwise, a new array of the

* same runtime type is allocated for this purpose

* @return an array containing all of the elements in this deque

* @throws ArrayStoreException if the runtime type of the specified array

* is not a supertype of the runtime type of every element in

* this deque

* @throws NullPointerException if the specified array is null

*/

@Override

@SuppressWarnings("unchecked")

public <T> T[] toArray(T[] a) {

final ReentrantLock lock = this.lock;

lock.lock();

try {

if (a.length < count) {

a = (T[]) java.lang.reflect.Array.newInstance

(a.getClass().getComponentType(), count);

}

int k = 0;

for (Node<E> p = first; p != null; p = p.next) {

a[k++] = (T) p.item;

}

if (a.length > k) {

a[k] = null;

}

return a;

} finally {

lock.unlock();

}

}

@Override

public String toString() {

final ReentrantLock lock = this.lock;

lock.lock();

try {

Node<E> p = first;

if (p == null) {

return "[]";

}

StringBuilder sb = new StringBuilder();

sb.append('[');

for (; ; ) {

E e = p.item;

sb.append(e == this ? "(this Collection)" : e);

p = p.next;

if (p == null) {

return sb.append(']').toString();

}

sb.append(',').append(' ');

}

} finally {

lock.unlock();

}

}

/**

* Atomically removes all of the elements from this deque.

* The deque will be empty after this call returns.

*/

@Override

public void clear() {

final ReentrantLock lock = this.lock;

lock.lock();

try {

for (Node<E> f = first; f != null; ) {

f.item = null;

Node<E> n = f.next;

f.prev = null;

f.next = null;

f = n;

}

first = last = null;

count = 0;

notFull.signalAll();

} finally {

lock.unlock();

}

}

/**

* Returns an iterator over the elements in this deque in proper sequence.

* The elements will be returned in order from first (head) to last (tail).

*

* <p>The returned iterator is

* <a href="package-summary.html#Weakly"><i>weakly consistent</i></a>.

*

* @return an iterator over the elements in this deque in proper sequence

*/

@Override

public Iterator<E> iterator() {

return new Itr();

}

/**

* Returns an iterator over the elements in this deque in reverse

* sequential order. The elements will be returned in order from

* last (tail) to first (head).

*

* <p>The returned iterator is

* <a href="package-summary.html#Weakly"><i>weakly consistent</i></a>.

*

* @return an iterator over the elements in this deque in reverse order

*/

@Override

public Iterator<E> descendingIterator() {

return new DescendingItr();

}

/**

* Base class for Iterators for ResizableCapacityLinkedBlockingQueue

*/

private abstract class AbstractItr implements Iterator<E> {

/**

* The next node to return in next()

*/

Node<E> next;

/**

* nextItem holds on to item fields because once we claim that

* an element exists in hasNext(), we must return item read

* under lock (in advance()) even if it was in the process of

* being removed when hasNext() was called.

*/

E nextItem;

/**

* Node returned by most recent call to next. Needed by remove.

* Reset to null if this element is deleted by a call to remove.

*/

private Node<E> lastRet;

abstract Node<E> firstNode();

abstract Node<E> nextNode(Node<E> n);

AbstractItr() {

// set to initial position

final ReentrantLock lock = ResizableCapacityLinkedBlockingQueue.this.lock;

lock.lock();

try {

next = firstNode();

nextItem = (next == null) ? null : next.item;

} finally {

lock.unlock();

}

}

/**

* Returns the successor node of the given non-null, but

* possibly previously deleted, node.

*/

private Node<E> succ(Node<E> n) {

// Chains of deleted nodes ending in null or self-links

// are possible if multiple interior nodes are removed.

for (; ; ) {

Node<E> s = nextNode(n);

if (s == null) {

return null;

} else if (s.item != null) {

return s;

} else if (s == n) {

return firstNode();

} else {

n = s;

}

}

}

/**

* Advances next.

*/

void advance() {

final ReentrantLock lock = ResizableCapacityLinkedBlockingQueue.this.lock;

lock.lock();

try {

// assert next != null;

next = succ(next);

nextItem = (next == null) ? null : next.item;

} finally {

lock.unlock();

}

}

@Override

public boolean hasNext() {

return next != null;

}

@Override

public E next() {

if (next == null) {

throw new NoSuchElementException();

}

lastRet = next;

E x = nextItem;

advance();

return x;

}

@Override

public void remove() {

Node<E> n = lastRet;

if (n == null) {

throw new IllegalStateException();

}

lastRet = null;

final ReentrantLock lock = ResizableCapacityLinkedBlockingQueue.this.lock;

lock.lock();

try {

if (n.item != null) {

unlink(n);

}

} finally {

lock.unlock();

}

}

}

/**

* Forward iterator

*/

private class Itr extends AbstractItr {

@Override

Node<E> firstNode() {

return first;

}

@Override

Node<E> nextNode(Node<E> n) {

return n.next;

}

}

/**

* Descending iterator

*/

private class DescendingItr extends AbstractItr {

@Override

Node<E> firstNode() {

return last;

}

@Override

Node<E> nextNode(Node<E> n) {

return n.prev;

}

}

/**

* A customized variant of Spliterators.IteratorSpliterator

*/

static final class LBDSpliterator<E> implements Spliterator<E> {

static final int MAX_BATCH = 1 << 25; // max batch array size;

final ResizableCapacityLinkedBlockingQueue<E> queue;

Node<E> current; // current node; null until initialized

int batch; // batch size for splits

boolean exhausted; // true when no more nodes

long est; // size estimate

LBDSpliterator(ResizableCapacityLinkedBlockingQueue<E> queue) {

this.queue = queue;

this.est = queue.size();

}

@Override

public long estimateSize() {

return est;

}

@Override

public Spliterator<E> trySplit() {

Node<E> h;

final ResizableCapacityLinkedBlockingQueue<E> q = this.queue;

int b = batch;

int n = (b <= 0) ? 1 : (b >= MAX_BATCH) ? MAX_BATCH : b + 1;

if (!exhausted &&

((h = current) != null || (h = q.first) != null) &&

h.next != null) {

Object[] a = new Object[n];

final ReentrantLock lock = q.lock;

int i = 0;

Node<E> p = current;

lock.lock();

try {

if (p != null || (p = q.first) != null) {

do {

if ((a[i] = p.item) != null) {

++i;

}

} while ((p = p.next) != null && i < n);

}

} finally {

lock.unlock();

}

if ((current = p) == null) {

est = 0L;

exhausted = true;

} else if ((est -= i) < 0L) {

est = 0L;

}

if (i > 0) {

batch = i;

return Spliterators.spliterator

(a, 0, i, Spliterator.ORDERED | Spliterator.NONNULL |

Spliterator.CONCURRENT);

}

}

return null;

}

@Override

public void forEachRemaining(Consumer<? super E> action) {

if (action == null) {

throw new NullPointerException();

}

final ResizableCapacityLinkedBlockingQueue<E> q = this.queue;

final ReentrantLock lock = q.lock;

if (!exhausted) {

exhausted = true;

Node<E> p = current;

do {

E e = null;

lock.lock();

try {

if (p == null) {

p = q.first;

}

while (p != null) {

e = p.item;

p = p.next;

if (e != null) {

break;

}

}

} finally {

lock.unlock();

}

if (e != null) {

action.accept(e);

}

} while (p != null);

}

}

@Override

public boolean tryAdvance(Consumer<? super E> action) {

if (action == null) {

throw new NullPointerException();

}

final ResizableCapacityLinkedBlockingQueue<E> q = this.queue;

final ReentrantLock lock = q.lock;

if (!exhausted) {

E e = null;

lock.lock();

try {

if (current == null) {

current = q.first;

}

while (current != null) {

e = current.item;

current = current.next;

if (e != null) {

break;

}

}

} finally {

lock.unlock();

}

if (current == null) {

exhausted = true;

}

if (e != null) {

action.accept(e);

return true;

}

}

return false;

}

@Override

public int characteristics() {

return Spliterator.ORDERED | Spliterator.NONNULL |

Spliterator.CONCURRENT;

}

}

/**

* Returns a {@link Spliterator} over the elements in this deque.

*

* <p>The returned spliterator is

* <a href="package-summary.html#Weakly"><i>weakly consistent</i></a>.

*

* <p>The {@code Spliterator} reports {@link Spliterator#CONCURRENT},

* {@link Spliterator#ORDERED}, and {@link Spliterator#NONNULL}.

*

* @return a {@code Spliterator} over the elements in this deque

* @implNote The {@code Spliterator} implements {@code trySplit} to permit limited

* parallelism.

* @since 1.8

*/

@Override

public Spliterator<E> spliterator() {

return new LBDSpliterator<E>(this);

}

/**

* Saves this deque to a stream (that is, serializes it).

*

* @param s the stream

* @throws java.io.IOException if an I/O error occurs

* @serialData The capacity (int), followed by elements (each an

* {@code Object}) in the proper order, followed by a null

*/

private void writeObject(java.io.ObjectOutputStream s)

throws java.io.IOException {

final ReentrantLock lock = this.lock;

lock.lock();

try {

// Write out capacity and any hidden stuff

s.defaultWriteObject();

// Write out all elements in the proper order.

for (Node<E> p = first; p != null; p = p.next) {

s.writeObject(p.item);

}

// Use trailing null as sentinel

s.writeObject(null);

} finally {

lock.unlock();

}

}

/**

* Reconstitutes this deque from a stream (that is, deserializes it).

*

* @param s the stream

* @throws ClassNotFoundException if the class of a serialized object

* could not be found

* @throws java.io.IOException if an I/O error occurs

*/

private void readObject(java.io.ObjectInputStream s)

throws java.io.IOException, ClassNotFoundException {

s.defaultReadObject();

count = 0;

first = null;

last = null;

// Read in all elements and place in queue

for (; ; ) {

@SuppressWarnings("unchecked")

E item = (E) s.readObject();

if (item == null) {

break;

}

add(item);

}

}

}
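The iterators of the queue above are documented as weakly consistent: they tolerate concurrent modification and never throw ConcurrentModificationException. The sketch below illustrates that behaviour with the JDK's own `LinkedBlockingDeque`, on which this queue is modelled. The class name `WeaklyConsistentDemo` is made up for illustration and is not part of the article's code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;
import java.util.concurrent.LinkedBlockingDeque;

// Hypothetical demo class; uses the JDK's LinkedBlockingDeque as a stand-in.
class WeaklyConsistentDemo {

    /** Iterates a deque while modifying it and returns what the iterator handed out. */
    static List<String> iterateWhileMutating() {
        LinkedBlockingDeque<String> deque =
                new LinkedBlockingDeque<>(Arrays.asList("a", "b", "c"));
        List<String> seen = new ArrayList<>();
        Iterator<String> it = deque.iterator();

        seen.add(it.next());   // returns "a"; the iterator has already cached "b" as its next item
        deque.remove("c");     // structural changes during iteration are allowed
        deque.add("d");

        while (it.hasNext()) {
            seen.add(it.next()); // no ConcurrentModificationException is thrown here
        }
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(iterateWhileMutating());
    }
}
```

Whether later changes are reflected by an iterator that is already in flight is not guaranteed, so monitoring code should treat iteration results as an approximate snapshot.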

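- A custom thread pool that records how long each task takes, along with the average, maximum, and minimum task time, and writes monitoring log output;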

/**

* Thread pool monitoring class.

*

* @author wangtongzhou

* @since 2022-02-23 07:27

*/

public class ThreadPoolMonitor extends ThreadPoolExecutor {

private static final Logger LOGGER = LoggerFactory.getLogger(ThreadPoolMonitor.class);

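/**
 * Default rejection policy.
 */
private static final RejectedExecutionHandler defaultHandler = new AbortPolicy();

/**
 * Pool name, usually named after the business it serves so pools are easy to tell apart.
 */
private String poolName;

/**
 * Shortest task execution time, in milliseconds (null until the first task finishes).
 */
private Long minCostTime;

/**
 * Longest task execution time, in milliseconds (null until the first task finishes).
 */
private Long maxCostTime;

/**
 * Total execution time of all tasks, in milliseconds.
 */
private AtomicLong totalCostTime = new AtomicLong();

/**
 * Start time of the task currently running on each worker thread.
 */
private ThreadLocal<Long> startTimeThreadLocal = new ThreadLocal<>();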

/**
 * Calls the superclass constructor and sets the pool name.
 *
 * @param corePoolSize    core pool size
 * @param maximumPoolSize maximum pool size
 * @param keepAliveTime   maximum idle time of a thread
 * @param unit            unit of the idle time
 * @param workQueue       queue holding submitted tasks
 * @param poolName        pool name
 */
public ThreadPoolMonitor(int corePoolSize, int maximumPoolSize, long keepAliveTime,
                         TimeUnit unit, BlockingQueue<Runnable> workQueue, String poolName) {
    // Use the named thread factory defined below so worker threads carry the pool name.
    this(corePoolSize, maximumPoolSize, keepAliveTime, unit, workQueue,
            new MonitorThreadFactory(poolName), poolName);
}

/**
 * Calls the superclass constructor and sets the pool name.
 *
 * @param corePoolSize    core pool size
 * @param maximumPoolSize maximum pool size
 * @param keepAliveTime   maximum idle time of a thread
 * @param unit            unit of the idle time
 * @param workQueue       queue holding submitted tasks
 * @param handler         rejection policy
 * @param poolName        pool name
 */
public ThreadPoolMonitor(int corePoolSize, int maximumPoolSize, long keepAliveTime,
                         TimeUnit unit, BlockingQueue<Runnable> workQueue,
                         RejectedExecutionHandler handler, String poolName) {
    // Use the named thread factory defined below so worker threads carry the pool name.
    this(corePoolSize, maximumPoolSize, keepAliveTime, unit, workQueue,
            new MonitorThreadFactory(poolName), handler, poolName);
}

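/**
 * Calls the superclass constructor with the default rejection policy and sets the pool name.
 *
 * @param corePoolSize    core pool size
 * @param maximumPoolSize maximum pool size
 * @param keepAliveTime   maximum idle time of a thread
 * @param unit            unit of the idle time
 * @param workQueue       queue holding submitted tasks
 * @param threadFactory   thread factory
 * @param poolName        pool name
 */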

public ThreadPoolMonitor(int corePoolSize, int maximumPoolSize, long keepAliveTime,

TimeUnit unit, BlockingQueue<Runnable> workQueue,

ThreadFactory threadFactory, String poolName) {

super(corePoolSize, maximumPoolSize, keepAliveTime, unit, workQueue, threadFactory, defaultHandler);

this.poolName = poolName;

}

/**
 * Calls the superclass constructor and sets the pool name.
 *
 * @param corePoolSize    core pool size
 * @param maximumPoolSize maximum pool size
 * @param keepAliveTime   maximum idle time of a thread
 * @param unit            unit of the idle time
 * @param workQueue       queue holding submitted tasks
 * @param threadFactory   thread factory
 * @param handler         rejection policy
 * @param poolName        pool name
 */

public ThreadPoolMonitor(int corePoolSize, int maximumPoolSize, long keepAliveTime,

TimeUnit unit, BlockingQueue<Runnable> workQueue,

ThreadFactory threadFactory, RejectedExecutionHandler handler, String poolName) {

super(corePoolSize, maximumPoolSize, keepAliveTime, unit, workQueue, threadFactory, handler);

this.poolName = poolName;

}

/**
 * On an orderly shutdown (queued tasks are still executed), log the pool statistics.
 */
@Override
public void shutdown() {
    // Report completed, running, and still-queued task counts.
    LOGGER.info("{} shutting down pool, completed tasks: {}, running tasks: {}, queued tasks: {}",
            this.poolName, this.getCompletedTaskCount(), this.getActiveCount(), this.getQueue().size());
    super.shutdown();
}

/**
 * On an immediate shutdown, log the pool statistics.
 */
@Override
public List<Runnable> shutdownNow() {
    // Report completed, running, and still-queued task counts.
    LOGGER.info("{} shutting down pool immediately, completed tasks: {}, running tasks: {}, queued tasks: {}",
            this.poolName, this.getCompletedTaskCount(), this.getActiveCount(), this.getQueue().size());
    return super.shutdownNow();
}

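/**
 * Before a task runs, record its start time.
 */
@Override
protected void beforeExecute(Thread t, Runnable r) {
    startTimeThreadLocal.set(System.currentTimeMillis());
}

/**
 * After a task runs, compute its elapsed time and update the statistics.
 */
@Override
protected void afterExecute(Runnable r, Throwable t) {
    Long startTime = startTimeThreadLocal.get();
    startTimeThreadLocal.remove();
    long costTime = startTime == null ? 0L : System.currentTimeMillis() - startTime;
    // minCostTime and maxCostTime start out null and are shared by every worker thread,
    // so compare and update them under a lock instead of unboxing them directly.
    synchronized (totalCostTime) {
        if (maxCostTime == null || costTime > maxCostTime) {
            maxCostTime = costTime;
        }
        if (minCostTime == null || costTime < minCostTime) {
            minCostTime = costTime;
        }
    }
    totalCostTime.addAndGet(costTime);
    LOGGER.info("{}-pool-monitor: " +
                    "task cost: {} ms, current pool size: {}, core pool size: {}, active threads: {}, " +
                    "completed tasks: {}, total tasks: {}, queued tasks: {}, largest pool size reached: {}, " +
                    "maximum pool size: {}, keep-alive time: {} ms, shutdown: {}, terminated: {}",
            this.poolName,
            costTime, this.getPoolSize(), this.getCorePoolSize(), this.getActiveCount(),
            this.getCompletedTaskCount(), this.getTaskCount(), this.getQueue().size(), this.getLargestPoolSize(),
            this.getMaximumPoolSize(), this.getKeepAliveTime(TimeUnit.MILLISECONDS), this.isShutdown(), this.isTerminated());
}

/** Returns the shortest task time in milliseconds, or null if no task has finished yet. */
public Long getMinCostTime() {
    synchronized (totalCostTime) {
        return minCostTime;
    }
}

/** Returns the longest task time in milliseconds, or null if no task has finished yet. */
public Long getMaxCostTime() {
    synchronized (totalCostTime) {
        return maxCostTime;
    }
}

/** Returns the average task time in milliseconds, or 0 if no task has completed. */
public long getAverageCostTime() {
    if (getCompletedTaskCount() == 0 || totalCostTime.get() == 0) {
        return 0;
    }
    return totalCostTime.get() / getCompletedTaskCount();
}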

/**
 * Creates the threads used by the pool. Replaces the default thread factory and
 * takes the pool name so that problems are easier to trace.
 */

static class MonitorThreadFactory implements ThreadFactory {

private static final AtomicInteger poolNumber = new AtomicInteger(1);

private final ThreadGroup group;

private final AtomicInteger threadNumber = new AtomicInteger(1);

private final String namePrefix;

/**
 * Initializes the thread factory.
 *
 * @param poolName pool name
 */

MonitorThreadFactory(String poolName) {

SecurityManager s = System.getSecurityManager();

group = Objects.nonNull(s) ? s.getThreadGroup() : Thread.currentThread().getThreadGroup();

namePrefix = poolName + "-pool-" + poolNumber.getAndIncrement() + "-thread-";

}

@Override

public Thread newThread(Runnable r) {

Thread t = new Thread(group, r, namePrefix + threadNumber.getAndIncrement(), 0);

if (t.isDaemon()) {

t.setDaemon(false);

}

if (t.getPriority() != Thread.NORM_PRIORITY) {

t.setPriority(Thread.NORM_PRIORITY);

}

return t;

}

}

}
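Because `afterExecute` runs on every worker thread, the minimum, maximum, and total cost time are shared mutable state. As a minimal sketch of one way to keep those statistics without locks, the example below uses `LongAccumulator` and `LongAdder` from the JDK. The class `TimedThreadPool`, its constructor parameters, and the `runDemo` helper are assumptions made for illustration; they are not the article's `ThreadPoolMonitor`.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.LongAccumulator;
import java.util.concurrent.atomic.LongAdder;

// Hypothetical, trimmed-down pool that only tracks task cost times.
class TimedThreadPool extends ThreadPoolExecutor {
    private final ThreadLocal<Long> startNanos = new ThreadLocal<>();
    private final LongAccumulator minCostMs = new LongAccumulator(Math::min, Long.MAX_VALUE);
    private final LongAccumulator maxCostMs = new LongAccumulator(Math::max, 0L);
    private final LongAdder totalCostMs = new LongAdder();
    private final LongAdder timedTasks = new LongAdder();

    TimedThreadPool(int coreSize, int maxSize, int queueCapacity) {
        super(coreSize, maxSize, 60L, TimeUnit.SECONDS, new LinkedBlockingQueue<>(queueCapacity));
    }

    @Override
    protected void beforeExecute(Thread t, Runnable r) {
        super.beforeExecute(t, r);
        startNanos.set(System.nanoTime()); // monotonic clock, unaffected by wall-clock changes
    }

    @Override
    protected void afterExecute(Runnable r, Throwable t) {
        Long start = startNanos.get();
        startNanos.remove();
        if (start != null) {
            long costMs = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
            minCostMs.accumulate(costMs);
            maxCostMs.accumulate(costMs);
            totalCostMs.add(costMs);
            timedTasks.increment();
        }
        super.afterExecute(r, t);
    }

    long getTimedTaskCount() { return timedTasks.sum(); }
    long getMinCostMs() { return timedTasks.sum() == 0 ? 0 : minCostMs.get(); }
    long getMaxCostMs() { return maxCostMs.get(); }
    long getAverageCostMs() {
        long n = timedTasks.sum();
        return n == 0 ? 0 : totalCostMs.sum() / n;
    }

    /** Runs four short sleeping tasks and returns the pool after it has terminated. */
    static TimedThreadPool runDemo() {
        TimedThreadPool pool = new TimedThreadPool(2, 2, 16);
        for (int i = 1; i <= 4; i++) {
            final long sleepMs = 10L * i;
            pool.execute(() -> {
                try {
                    Thread.sleep(sleepMs);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return pool;
    }
}
```

The average is computed over the tasks this class has timed itself, so it does not depend on `getCompletedTaskCount()`, which is only incremented after `afterExecute` returns.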

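- A class that modifies thread pools dynamically: it uses a Spring event listener to watch for configuration refreshes and updates the thread pool parameters at runtime;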

/**

* Dynamically refreshes thread pools.

*

* @author wangtongzhou

* @since 2022-03-13 14:13

*/

@Component

@Slf4j

public class DynamicThreadPoolManager {

@Autowired

private DynamicThreadPoolProperties dynamicThreadPoolProperties;

/**

* Holds the thread pool instances, keyed by pool name.

*/

public Map<String, ThreadPoolMonitor> threadPoolExecutorMap = new HashMap<>();

public Map<String, ThreadPoolMonitor> getThreadPoolExecutorMap() {

return threadPoolExecutorMap;

}

/**

* Initializes the thread pools.

*/

@PostConstruct

public void init() {

createThreadPools(dynamicThreadPoolProperties);

}

/**

* Creates the thread pools from the configuration.
*
* @param dynamicThreadPoolProperties thread pool configuration

*/

private void createThreadPools(DynamicThreadPoolProperties dynamicThreadPoolProperties) {

dynamicThreadPoolProperties.getExecutors().forEach(config -> {

if (!threadPoolExecutorMap.containsKey(config.getThreadPoolName())) {

ThreadPoolMonitor threadPoolMonitor = new ThreadPoolMonitor(

config.getCorePoolSize(),

config.getMaxPoolSize(),

config.getKeepAliveTime(),

config.getUnit(),

new ResizableCapacityLinkedBlockingQueue<>(config.getQueueCapacity()),

RejectedExecutionHandlerEnum.getRejectedExecutionHandler(config.getRejectedExecutionType()),

config.getThreadPoolName()

);

threadPoolExecutorMap.put(config.getThreadPoolName(),

threadPoolMonitor);

}

});

}

/**
 * Applies the latest configuration to the existing thread pools.
 *
 * @param dynamicThreadPoolProperties thread pool configuration
 */
private void changeThreadPools(DynamicThreadPoolProperties dynamicThreadPoolProperties) {
    dynamicThreadPoolProperties.getExecutors().forEach(config -> {
        ThreadPoolExecutor threadPoolExecutor = threadPoolExecutorMap.get(config.getThreadPoolName());
        if (Objects.nonNull(threadPoolExecutor)) {
            // On JDK 9+ the setters reject any intermediate state where core > max,
            // so raise the maximum first when growing and lower the core size first when shrinking.
            if (config.getMaxPoolSize() >= threadPoolExecutor.getMaximumPoolSize()) {
                threadPoolExecutor.setMaximumPoolSize(config.getMaxPoolSize());
                threadPoolExecutor.setCorePoolSize(config.getCorePoolSize());
            } else {
                threadPoolExecutor.setCorePoolSize(config.getCorePoolSize());
                threadPoolExecutor.setMaximumPoolSize(config.getMaxPoolSize());
            }
            threadPoolExecutor.setKeepAliveTime(config.getKeepAliveTime(), config.getUnit());
            threadPoolExecutor.setRejectedExecutionHandler(
                    RejectedExecutionHandlerEnum.getRejectedExecutionHandler(config.getRejectedExecutionType()));
            BlockingQueue<Runnable> queue = threadPoolExecutor.getQueue();
            if (queue instanceof ResizableCapacityLinkedBlockingQueue) {
                ((ResizableCapacityLinkedBlockingQueue<Runnable>) queue).setCapacity(config.getQueueCapacity());
            }
        }
    });
}

@EventListener

public void envListener(EnvironmentChangeEvent event) {

log.info("Configuration changed: {}", event);

changeThreadPools(dynamicThreadPoolProperties);

}

}
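When resizing a live pool, the order of the two setter calls matters: `setMaximumPoolSize` rejects a maximum below the current core size, and on JDK 9 and later `setCorePoolSize` also rejects a core size above the current maximum. A minimal sketch of an order-safe resize with a plain `ThreadPoolExecutor` follows. The helper class `PoolResizer` and its `demo` method are hypothetical names used only for illustration.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical helper, not part of the article's code.
class PoolResizer {

    /** Applies a new core/max pair without passing through a state where core > max. */
    static void resize(ThreadPoolExecutor pool, int newCore, int newMax) {
        if (newCore < 0 || newMax <= 0 || newMax < newCore) {
            throw new IllegalArgumentException("invalid sizes: core=" + newCore + ", max=" + newMax);
        }
        if (newMax >= pool.getMaximumPoolSize()) {
            // Growing (or keeping) the ceiling: raise the maximum first so the new core size fits.
            pool.setMaximumPoolSize(newMax);
            pool.setCorePoolSize(newCore);
        } else {
            // Lowering the ceiling: shrink the core size first so it stays below the new maximum.
            pool.setCorePoolSize(newCore);
            pool.setMaximumPoolSize(newMax);
        }
    }

    /** Grows a 2/4 pool to 10/20, then shrinks it to 1/2; returns the sizes observed after each step. */
    static int[] demo() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 4, 60L, TimeUnit.SECONDS, new LinkedBlockingQueue<>(100));
        try {
            resize(pool, 10, 20);
            int coreAfterGrow = pool.getCorePoolSize();
            int maxAfterGrow = pool.getMaximumPoolSize();
            resize(pool, 1, 2);
            return new int[] {coreAfterGrow, maxAfterGrow, pool.getCorePoolSize(), pool.getMaximumPoolSize()};
        } finally {
            pool.shutdown();
        }
    }
}
```

Validating the pair up front also keeps a bad configuration push from leaving the pool half-updated.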

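- DynamicThreadPoolPropertiesController exposes two endpoints: the first uses ContextRefresher to refresh the configuration on demand so that changes take effect promptly, and the second queries the current state of the thread pools.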

/**

* Dynamically modifies thread pool parameters.

*

* @author wangtongzhou

* @since 2022-03-13 17:27

*/

@RestController

public class DynamicThreadPoolPropertiesController {

@Autowired

private ContextRefresher contextRefresher;

@Autowired

private DynamicThreadPoolProperties dynamicThreadPoolProperties;

@Autowired

private DynamicThreadPoolManager dynamicThreadPoolManager;

@PostMapping("/threadPool/properties")

public void update() {

ThreadPoolProperties threadPoolProperties =

dynamicThreadPoolProperties.getExecutors().get(0);

threadPoolProperties.setCorePoolSize(20);

threadPoolProperties.setMaxPoolSize(50);

threadPoolProperties.setQueueCapacity(200);

threadPoolProperties.setRejectedExecutionType("CallerRunsPolicy");

contextRefresher.refresh();

}

@GetMapping("/threadPool/properties")

public Map<String, Object> queryThreadPoolProperties() {

Map<String, Object> metricMap = new HashMap<>();

List<Map<String, Object>> threadPools = new ArrayList<>();

dynamicThreadPoolManager.getThreadPoolExecutorMap().forEach((k, v) -> {

ThreadPoolMonitor threadPoolMonitor = v;

Map<String, Object> poolInfo = new HashMap<>();

poolInfo.put("thread.pool.name", k);

poolInfo.put("thread.pool.core.size", threadPoolMonitor.getCorePoolSize());

poolInfo.put("thread.pool.largest.size", threadPoolMonitor.getLargestPoolSize());

poolInfo.put("thread.pool.max.size", threadPoolMonitor.getMaximumPoolSize());

poolInfo.put("thread.pool.thread.count", threadPoolMonitor.getPoolSize());

poolInfo.put("thread.pool.max.costTime", threadPoolMonitor.getMaxCostTime());

poolInfo.put("thread.pool.average.costTime", threadPoolMonitor.getAverageCostTime());

poolInfo.put("thread.pool.min.costTime", threadPoolMonitor.getMinCostTime());

poolInfo.put("thread.pool.active.count", threadPoolMonitor.getActiveCount());

poolInfo.put("thread.pool.completed.taskCount", threadPoolMonitor.getCompletedTaskCount());

poolInfo.put("thread.pool.queue.name", threadPoolMonitor.getQueue().getClass().getName());

poolInfo.put("thread.pool.rejected.name", threadPoolMonitor.getRejectedExecutionHandler().getClass().getName());

poolInfo.put("thread.pool.task.count", threadPoolMonitor.getTaskCount());

threadPools.add(poolInfo);

});

metricMap.put("threadPools", threadPools);

return metricMap;

}

}

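That completes the overall flow; consider it a demo version. Thinking further about this component, I believe the following three things are still worth doing:

- It should be embedded into applications as a starter, detecting at startup whether Apollo, Nacos, or the default implementation is loaded;

- For output, it could push metrics, write logs, or support various stores, offering a rich set of output forms;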
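As a rough sketch of the push-style output mentioned above, the example below takes a periodic snapshot of a `ThreadPoolExecutor` and hands it to a pluggable sink, which could write a log line or forward to a metrics store. The class `PoolMetricsReporter`, the `sink` parameter, and the `demo` helper are assumptions made for illustration; only the metric key names are borrowed from the query endpoint.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

// Hypothetical reporter, not part of the article's code.
class PoolMetricsReporter {

    /** Collects a point-in-time view of the pool; values are approximate under load. */
    static Map<String, Object> snapshot(String poolName, ThreadPoolExecutor pool) {
        Map<String, Object> m = new LinkedHashMap<>();
        m.put("thread.pool.name", poolName);
        m.put("thread.pool.core.size", pool.getCorePoolSize());
        m.put("thread.pool.max.size", pool.getMaximumPoolSize());
        m.put("thread.pool.thread.count", pool.getPoolSize());
        m.put("thread.pool.active.count", pool.getActiveCount());
        m.put("thread.pool.queue.size", pool.getQueue().size());
        m.put("thread.pool.completed.taskCount", pool.getCompletedTaskCount());
        return m;
    }

    /** Pushes a snapshot to the sink at a fixed rate; the caller shuts the scheduler down. */
    static ScheduledExecutorService start(String poolName, ThreadPoolExecutor pool,
                                          Consumer<Map<String, Object>> sink, long periodMillis) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor(r -> {
            Thread t = new Thread(r, poolName + "-metrics-reporter");
            t.setDaemon(true); // reporting must not keep the JVM alive
            return t;
        });
        scheduler.scheduleAtFixedRate(() -> {
            try {
                sink.accept(snapshot(poolName, pool));
            } catch (RuntimeException e) {
                // An uncaught exception would cancel the schedule, so report it and carry on.
                System.err.println("metrics sink failed: " + e);
            }
        }, 0L, periodMillis, TimeUnit.MILLISECONDS);
        return scheduler;
    }

    /** Takes one snapshot of an idle 2/4 pool. */
    static Map<String, Object> demo() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 4, 60L, TimeUnit.SECONDS, new LinkedBlockingQueue<>(100));
        try {
            return snapshot("demo", pool);
        } finally {
            pool.shutdown();
        }
    }
}
```

A call such as `PoolMetricsReporter.start("order", pool, System.out::println, 5000)` would print a snapshot every five seconds; swapping the sink changes the destination without touching the pool.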
