mongodb使用docker搭建replicaSet集群与变更监听(最新推荐)

目录

- 安装环境

- docker方式mongodb集群安装

- 目录与key准备

- 运行mongodb

- 配置节点

- 官方客户端验证

- 变更监听

在mongodb如果需要启用变更监听功能(watch),mongodb需要在replicaSet或者cluster方式下运行。

replicaSet和cluster从部署难度相比,replicaSet要简单许多。如果所存储的数据量规模不算太大的情况下,那么使用replicaSet方式部署mongodb是一个不错的选择。

安装环境

mongodb版本:mongodb-6.0.5

两台主机:主机1(192.168.1.11)、主机2(192.168.1.12)

docker方式mongodb集群安装

在主机1和主机2上安装好docker,并确保两台主机能正常通信

目录与key准备

在启动mongodb前,先准备好对应的目录与访问key

#在所有主机都创建用于存储mongodb数据的文件夹

mkdir -p ~/mongo-data/{data,key,backup}

#设置key文件,用于在集群机器间互相访问,各主机的key需要保持一致

cd ~/mongo-data

#在某一节点创建key

openssl rand -base64 123 > key/mongo-rs.key

sudo chown 999 key/mongo-rs.key

#不能是755, 权限太大不行.

sudo chmod 600 key/mongo-rs.key

#将key复制到他节点

scp key/mongo-rs.key root@192.168.1.12:/root/mongo-data/key

以上操作在各主机中创建了 ~/mongo-data/{data,key,backup} 这3个目录,且mongo-rs.key的内容一致。

运行mongodb

执行下列命令,启动mongodb

sudo docker run --name mongo --network=host -p 27017:27017 -v ~/mongo-data/data:/data/db -v ~/mongo-data/backup:/data/backup -v ~/mongo-data/key:/data/key -v /etc/localtime:/etc/localtime -e MONGO_INITDB_ROOT_USERNAME=admin -e MONGO_INITDB_ROOT_PASSWORD=123456 -d mongo:6.0.5 --replSet haiyangReplset --auth --keyFile /data/key/mongo-rs.key --bind_ip_all

上面主要将27017端口映射到主机中,并设了admin的默认密码为123456。

–replSet为指定开启replicaSet,后面跟的为副本集的名称。

配置节点

进入某一节点,进行集群配置

sudo docker exec -it mongo bash

mongosh

初始化集群前先登录验证超级管理员admin

use admin

db.auth(“admin”,“123456”)

再执行以下命令进行初始化

var config={

_id:"haiyangReplset",

members:[

{_id:0,host:"192.168.1.11:27017"},

{_id:1,host:"192.168.1.12:27017"},

]};

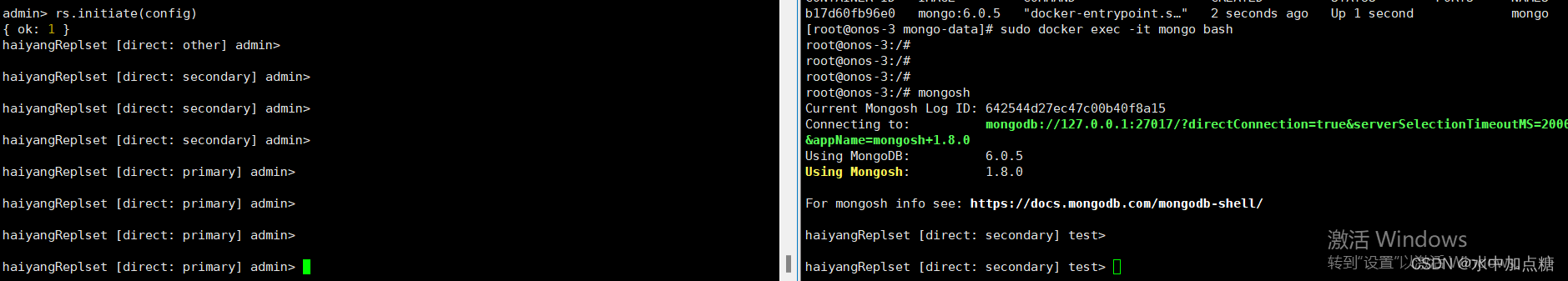

rs.initiate(config)

执行成功后,可以看到一个节点为主节点,另一个节点为从节点

其他相关命令

#查看副本集状态

rs.status()

#查看副本集配置

rs.conf()

#添加节点

rs.add( { host: "ip:port"} )

#删除节点

rs.remove('ip:port')

官方客户端验证

在mongodb安装好后,再用客户端连接验证一下。

官方mongodb的客户端下载地址为:https://www.mongodb.com/try/download/compass

下载完毕后,在客户端中新建连接。

在本例中,则mongodb的连接地址为:

mongodb://admin:123456@192.168.1.11:27017,192.168.1.12:27017/?authMechanism=DEFAULT&authSource=admin&replicaSet=haiyangReplset

库与监控信息一目了然~

变更监听

对于mongodb操作的api在mongodb的官网有比较完备的文档,java的文档连接为:https://www.mongodb.com/docs/drivers/java/sync/v4.9/

这里试一下mongodb中一个比较强悍的功能,记录的变更监听。

用这项功能来做一些审计的场景则会非常方便。

官方链接为:https://www.mongodb.com/docs/drivers/java/sync/v4.9/usage-examples/watch/

这里以java客户端为例写个小demo,试一下对于mongodb中集合的创建及watch功能。

package io.github.puhaiyang;

import com.google.common.collect.Lists;

import com.mongodb.client.*;

import org.apache.commons.lang3.StringUtils;

import org.bson.Document;

import org.bson.conversions.Bson;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.List;

import java.util.Objects;

import java.util.Optional;

import java.util.Scanner;

import java.util.concurrent.CompletableFuture;

/**

* @author puhaiyang

* @since 2023/3/30 20:01

* MongodbWatchTestMain

*/

public class MongodbWatchTestMain {

public static void main(String[] args) throws Exception {

String uri = "mongodb://admin:123456@192.168.1.11:27017,192.168.1.12:27017/?replicaSet=haiyangReplset";

MongoClient mongoClient = MongoClients.create(uri);

MongoDatabase mongoDatabase = mongoClient.getDatabase("my-test-db");

String myTestCollectionName = "myTestCollection";

//获取出collection

MongoCollection<Document> mongoCollection = initCollection(mongoDatabase, myTestCollectionName);

//进行watch

CompletableFuture.runAsync(() -> {

while (true) {

List<Bson> pipeline = Lists.newArrayList(

Aggregates.match(Filters.in("ns.coll", myTestCollectionName)),

Aggregates.match(Filters.in("operationType", Arrays.asList("insert", "update", "replace", "delete")))

);

ChangeStreamIterable<Document> changeStream = mongoDatabase.watch(pipeline)

.fullDocument(FullDocument.UPDATE_LOOKUP)

.fullDocumentBeforeChange(FullDocumentBeforeChange.WHEN_AVAILABLE);

changeStream.forEach(event -> {

String collectionName = Objects.requireNonNull(event.getNamespace()).getCollectionName();

System.out.println("--------> event:" + event.toString());

});

}

});

//数据变更测试

{

Thread.sleep(3_000);

InsertOneResult insertResult = mongoCollection.insertOne(new Document("test", "sample movie document"));

System.out.println("Success! Inserted document id: " + insertResult.getInsertedId());

UpdateResult updateResult = mongoCollection.updateOne(new Document("test", "sample movie document"), Updates.set("field2", "sample movie document update"));

System.out.println("Updated " + updateResult.getModifiedCount() + " document.");

DeleteResult deleteResult = mongoCollection.deleteOne(new Document("field2", "sample movie document update"));

System.out.println("Deleted " + deleteResult.getDeletedCount() + " document.");

}

new Scanner(System.in).next();

}

private static MongoCollection<Document> initCollection(MongoDatabase mongoDatabase, String myTestCollectionName) {

ArrayList<Document> existsCollections = mongoDatabase.listCollections().into(new ArrayList<>());

Optional<Document> existsCollInfoOpl = existsCollections.stream().filter(doc -> StringUtils.equals(myTestCollectionName, doc.getString("name"))).findFirst();

existsCollInfoOpl.ifPresent(collInfo -> {

//确保开启了changeStreamPreAndPost

Document changeStreamPreAndPostImagesEnable = collInfo.get("options", Document.class).get("changeStreamPreAndPostImages", Document.class);

if (changeStreamPreAndPostImagesEnable != null && !changeStreamPreAndPostImagesEnable.getBoolean("enabled")) {

Document mod = new Document();

mod.put("collMod", myTestCollectionName);

mod.put("changeStreamPreAndPostImages", new Document("enabled", true));

mongoDatabase.runCommand(mod);

}

});

if (!existsCollInfoOpl.isPresent()) {

CreateCollectionOptions collectionOptions = new CreateCollectionOptions();

//创建collection时开启ChangeStreamPreAndPostImages

collectionOptions.changeStreamPreAndPostImagesOptions(new ChangeStreamPreAndPostImagesOptions(true));

mongoDatabase.createCollection(myTestCollectionName, collectionOptions);

}

return mongoDatabase.getCollection(myTestCollectionName);

}

}

输出结果如下:

--------> event:ChangeStreamDocument{ operationType=insert, resumeToken={"_data": "8264255A0F000000022B022C0100296E5A10046A3E3757D6A64DF59E6D94DC56A9210446645F6964006464255A105A91F005CFB2E6D20004"}, namespace=my-test-db.myTestCollection, destinationNamespace=null, fullDocument=Document{{_id=64255a105a91f005cfb2e6d2, test=sample movie document}}, fullDocumentBeforeChange=null, documentKey={"_id": {"$oid": "64255a105a91f005cfb2e6d2"}}, clusterTime=Timestamp{value=7216272998402097154, seconds=1680169487, inc=2}, updateDescription=null, txnNumber=null, lsid=null, wallTime=BsonDateTime{value=1680169487686}}

Success! Inserted document id: BsonObjectId{value=64255a105a91f005cfb2e6d2}

Updated 1 document.

--------> event:ChangeStreamDocument{ operationType=update, resumeToken={"_data": "8264255A0F000000032B022C0100296E5A10046A3E3757D6A64DF59E6D94DC56A9210446645F6964006464255A105A91F005CFB2E6D20004"}, namespace=my-test-db.myTestCollection, destinationNamespace=null, fullDocument=Document{{_id=64255a105a91f005cfb2e6d2, test=sample movie document, field2=sample movie document update}}, fullDocumentBeforeChange=Document{{_id=64255a105a91f005cfb2e6d2, test=sample movie document}}, documentKey={"_id": {"$oid": "64255a105a91f005cfb2e6d2"}}, clusterTime=Timestamp{value=7216272998402097155, seconds=1680169487, inc=3}, updateDescription=UpdateDescription{removedFields=[], updatedFields={"field2": "sample movie document update"}, truncatedArrays=[], disambiguatedPaths=null}, txnNumber=null, lsid=null, wallTime=BsonDateTime{value=1680169487708}}

--------> event:ChangeStreamDocument{ operationType=delete, resumeToken={"_data": "8264255A0F000000042B022C0100296E5A10046A3E3757D6A64DF59E6D94DC56A9210446645F6964006464255A105A91F005CFB2E6D20004"}, namespace=my-test-db.myTestCollection, destinationNamespace=null, fullDocument=null, fullDocumentBeforeChange=Document{{_id=64255a105a91f005cfb2e6d2, test=sample movie document, field2=sample movie document update}}, documentKey={"_id": {"$oid": "64255a105a91f005cfb2e6d2"}}, clusterTime=Timestamp{value=7216272998402097156, seconds=1680169487, inc=4}, updateDescription=null, txnNumber=null, lsid=null, wallTime=BsonDateTime{value=1680169487721}}

Deleted 1 document.

到此这篇关于mongodb使用docker搭建replicaSet集群与变更监听的文章就介绍到这了,更多相关mongodb搭建replicaSet集群内容请搜索我们以前的文章或继续浏览下面的相关文章希望大家以后多多支持我们!